Introduction

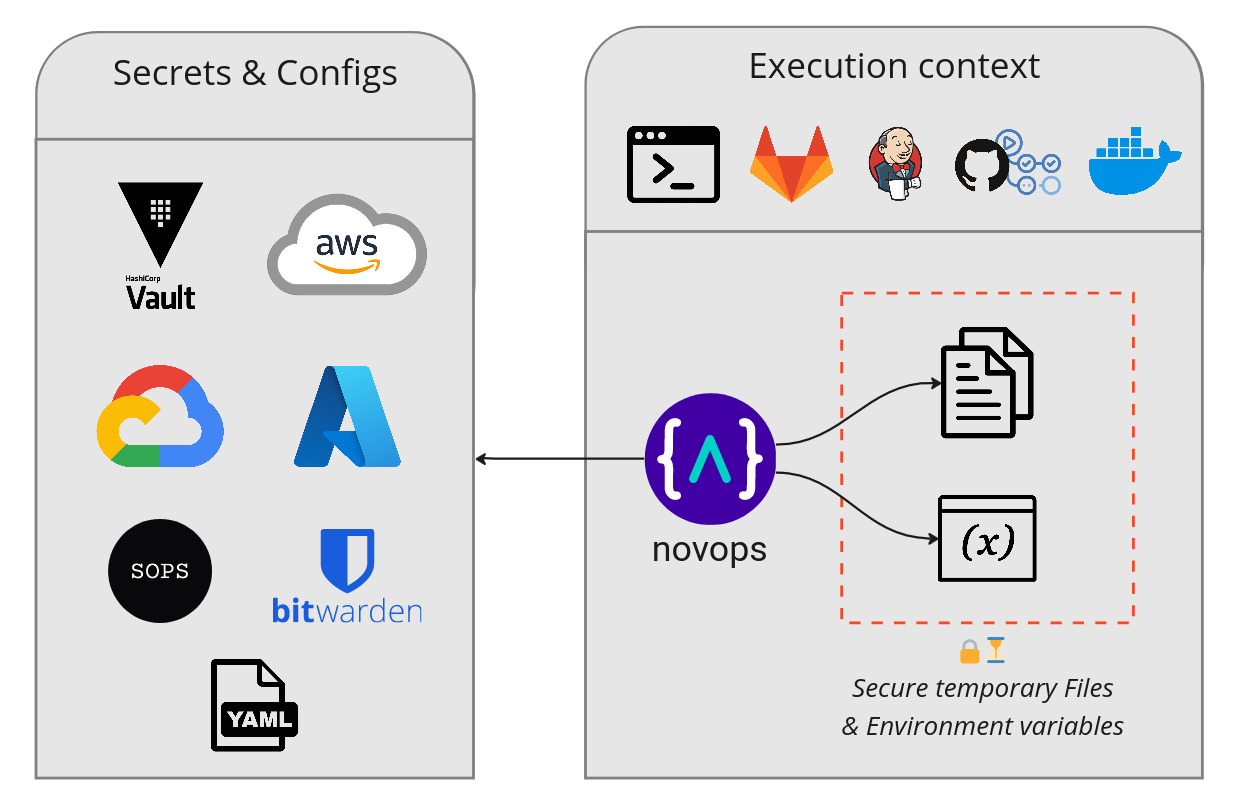

Novops, the universal secret and configuration manager for development, applications and CI.

- Features

- Getting Started

- 🔐 Security

- Why Novops?

- How is Novops different than other secret management tools?

Features

- Securely load secrets in protected in-memory files and environment variables

- Generate temporary credentials and secrets

- Fetch secrets from anywhere: Hashicorp Vault, AWS, Google Cloud, Azure, SOPS and more. Avoid syncing secrets between local tools, CI/CD, and Cloud secret services.

- Feed secrets directly to a command or process with novops run, easing usage of tools like Terraform, Pulumi, Ansible...

- Manage multiple environments: dev, preprod, prod... and configure them as you need.

- Easy installation with a fully static binary or Nix

Getting Started

🔐 Security

Secrets are loaded temporarily as environment variables or in a protected tmpfs directory and kept only for as long as they are needed. See Novops Security Model for details.

Why Novops?

Novops helps manage secrets and configurations so you don't keep them (often insecurely) in git-ignored folders, forgotten on your machine and spread around CI and server configs.

See Why Novops? for a detailed explanation and history.

How is Novops different than other secret management tools?

- Universal: unlike platform-specific tools like aws-vault, Novops is designed to be versatile and flexible, meeting a wide range of secret management needs across different platforms and tools.

- Free and Open Source: Novops is not trying to sell you a platform or subscription.

- Generate temporary credentials for cloud providers like AWS, where most tools only manage static key/value secrets.

- Manage multiple environments natively without requiring complex setup like teller.

- Fetch secrets from their source, avoiding the need to sync them manually with some encrypted file.

Installation

Novops is distributed as a standalone static binary. No dependencies are required.

Automated installation (Linux, MacOS and Windows with WSL)

Run this command:

```shell
sh -c "$(curl --location https://raw.githubusercontent.com/PierreBeucher/novops/main/install.sh)"
```

The install script downloads the latest Novops version, verifies its checksum and makes it available on your PATH.

Manual installation

Linux

Download the latest Novops binary:

```shell
# x86-64
curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops_linux_x86_64.zip" -o novops.zip

# arm64
curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops_linux_aarch64.zip" -o novops.zip
```

Or a specific version:

```shell
NOVOPS_VERSION=v0.12.0

# x86-64
curl -L "https://github.com/PierreBeucher/novops/releases/download/${NOVOPS_VERSION}/novops_linux_x86_64.zip" -o novops.zip

# arm64
curl -L "https://github.com/PierreBeucher/novops/releases/download/${NOVOPS_VERSION}/novops_linux_aarch64.zip" -o novops.zip
```

Install it:

```shell
unzip novops.zip
sudo mv novops /usr/local/bin/novops
```

Check it works:

```shell
novops --version
```
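The install script verifies a checksum, but the manual steps above do not. If you have the expected SHA-256 digest of the archive from a source you trust, you can compare it yourself before running unzip. This sketch assumes you obtain that digest yourself, for example from the GitHub release page. verify_sha256 is a hypothetical helper, not part of Novops. It uses sha256sum on Linux and falls back to shasum on macOS.

```shell
# Hypothetical helper: compare a file's SHA-256 digest with an expected value.
# The expected digest must come from a trusted source (assumption: release page).
verify_sha256() {
  file=$1
  expected=$2
  if command -v sha256sum >/dev/null 2>&1; then
    actual=$(sha256sum "$file" | cut -d ' ' -f 1)
  elif command -v shasum >/dev/null 2>&1; then
    actual=$(shasum -a 256 "$file" | cut -d ' ' -f 1)   # macOS
  else
    echo "no SHA-256 tool found" >&2
    return 2
  fi
  if [ "$actual" = "$expected" ]; then
    echo "checksum OK: $file"
  else
    echo "checksum MISMATCH: $file (got $actual)" >&2
    return 1
  fi
}

# Usage (placeholder digest, not a real Novops checksum):
# verify_sha256 novops.zip "<expected-sha256>" && unzip novops.zip
```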

MacOS (Darwin)

Download the latest Novops binary:

```shell
# x86-64
curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops_macos_x86_64.zip" -o novops.zip

# arm64
curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops_macos_aarch64.zip" -o novops.zip
```

Or a specific version:

```shell
NOVOPS_VERSION=v0.12.0

# x86-64
curl -L "https://github.com/PierreBeucher/novops/releases/download/${NOVOPS_VERSION}/novops_macos_x86_64.zip" -o novops.zip

# arm64
curl -L "https://github.com/PierreBeucher/novops/releases/download/${NOVOPS_VERSION}/novops_macos_aarch64.zip" -o novops.zip
```

Install it:

```shell
unzip novops.zip
sudo mv novops /usr/local/bin/novops
```

Check it works:

```shell
novops --version
```
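The Linux and macOS download URLs above follow one pattern: novops_<os>_<arch>.zip, under either releases/latest/download or releases/download/<version>. The following sketch builds that URL from uname output. novops_download_url is a hypothetical helper. Its mapping of uname values (Darwin to macos, arm64 to aarch64) is an assumption based only on the asset names listed above.

```shell
# Hypothetical helper: build a Novops release URL from the asset naming above.
novops_download_url() {
  version=${1:-latest}          # "latest" or a tag such as v0.12.0
  os=${2:-$(uname -s)}
  arch=${3:-$(uname -m)}

  case "$os" in
    Linux)  os=linux ;;
    Darwin) os=macos ;;
    *) echo "unsupported OS: $os" >&2; return 1 ;;
  esac
  case "$arch" in
    x86_64|amd64)  arch=x86_64 ;;
    aarch64|arm64) arch=aarch64 ;;   # macOS on Apple Silicon reports arm64
    *) echo "unsupported architecture: $arch" >&2; return 1 ;;
  esac

  base=https://github.com/PierreBeucher/novops/releases
  if [ "$version" = latest ]; then
    echo "$base/latest/download/novops_${os}_${arch}.zip"
  else
    echo "$base/download/${version}/novops_${os}_${arch}.zip"
  fi
}

# Usage:
# curl -L "$(novops_download_url v0.12.0)" -o novops.zip
```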

Windows

Use WSL and follow Linux installation.

Arch Linux

Available in the AUR (Arch User Repository):

```shell
yay -S novops-git
```

Nix

Use a flake.nix such as:

```nix
{
  description = "Example Flake using Novops";

  # Optional: use Cachix cache to avoid re-building Novops
  nixConfig = {
    extra-substituters = [
      "https://novops.cachix.org"
    ];
    extra-trusted-public-keys = [
      "novops.cachix.org-1:xm1fF2MoVYRmg89wqgQlM15u+2bk0LBfVktN9EgDaHY="
    ];
  };

  inputs = {
    novops.url = "github:PierreBeucher/novops"; # Add novops input
    flake-utils.url = "github:numtide/flake-utils";
  };

  outputs = { self, nixpkgs, novops, flake-utils }:
    flake-utils.lib.eachDefaultSystem (system:
      let
        pkgs = nixpkgs.legacyPackages.${system};
        novopsPackage = novops.packages.${system}.novops;
      in {
        devShells = {
          default = pkgs.mkShell {
            packages = [
              novopsPackage # Include Novops package in your shell
            ];
            shellHook = ''
              # Run novops on shell startup
              novops load -s .envrc && source .envrc
            '';
          };
        };
      }
    );
}
```

Direct binary download

See GitHub releases to download binaries directly.

Build from source

See Development and contribution guide to build from source.

Updating

To update Novops, replace the binary with a new one by following the installation steps above.

Getting started

- Install

- Usage

- 🔐 Security

- Run Novops with...

- Load and generate temporary secrets

- Multi-environment context

- Files

- Plain strings

- Next steps

Install

```shell
sh -c "$(curl --location https://raw.githubusercontent.com/PierreBeucher/novops/main/install.sh)"
```

See installation for more installation methods.

Usage

Consider a typical workflow: run build and deployment with secrets from Hashicorp Vault and temporary AWS credentials.

Create .novops.yml and commit it safely - it does not contain any secret:

```yaml
environments:
  dev:
    # Environment variables for dev environment
    variables:
      # Fetch Hashicorp Vault secrets
      - name: DATABASE_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: db_password

      # Plain strings are also supported
      - name: DATABASE_USER
        value: root

    # Generate temporary AWS credentials for IAM Role
    # Provide environment variables:
    # - AWS_ACCESS_KEY_ID
    # - AWS_SECRET_ACCESS_KEY
    # - AWS_SESSION_TOKEN
    aws:
      assume_role:
        role_arn: arn:aws:iam::12345678910:role/dev_deploy
```

Load secrets as environment variables:

```shell
# Source directly into your shell
source <(novops load)

# Or run sub-process directly
novops run -- make deploy
```

Secrets are now available:

```shell
echo $DATABASE_PASSWORD
# passxxxxxxx

env | grep AWS
# AWS_ACCESS_KEY_ID=AKIAXXX
# AWS_SECRET_ACCESS_KEY=xxx
# AWS_SESSION_TOKEN=xxx
```

🔐 Security

Secrets are loaded temporarily as environment variables or in a protected tmpfs directory and kept only for as long as they are needed. See Novops Security Model for details.

Run Novops with...

Shell

Either source directly into your shell or run a sub-process:

```shell
# bash / ksh: source with process substitution
source <(novops load)

# zsh: source with process substitution
source =(novops load)

# Run sub-process directly
novops run -- some_command

# Load into a .envrc file (novops creates a symlink pointing to a secure temporary file)
novops load -s .envrc && source .envrc
```
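The difference between sourcing and novops run is where the variables end up. The sketch below does not call Novops. It uses a hypothetical stand-in, fake_novops_load, which prints export lines like the dotenv-export format. It relies on eval, which behaves like source <(...) for this kind of output and also works in shells without process substitution.

```shell
# Hypothetical stand-in for `novops load`: prints dotenv-export lines.
fake_novops_load() {
  printf '%s\n' "export DATABASE_USER='root'"
  printf '%s\n' "export DATABASE_PASSWORD='dummy-password'"
}

# 1. Load into the current shell (like `source <(novops load)`):
#    variables stay set in this shell afterwards.
eval "$(fake_novops_load)"
echo "current shell: DATABASE_USER=$DATABASE_USER"

# 2. Load only for a child process (similar in spirit to `novops run -- cmd`):
#    the subshell and its children see the variables, the parent does not.
unset DATABASE_USER DATABASE_PASSWORD
( eval "$(fake_novops_load)"; sh -c 'echo "child: DATABASE_USER=$DATABASE_USER"' )
echo "parent afterwards: DATABASE_USER=${DATABASE_USER:-<unset>}"
```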

🐳 Docker & Podman

Load environment variables directly into containers:

```shell
docker run -it --env-file <(novops load -f dotenv -e dev) alpine sh
podman run -it --env-file <(novops load -f dotenv -e dev) alpine sh
```

More examples

- Shell

- Docker & Podman

- Nix

- CI / CD

- Infra as Code

Load and generate temporary secrets

Novops loads and generates temporary secrets from various platforms and providers as configured in .novops.yml.

Hashicorp Vault

Multiple Hashicorp Vault Secret Engines are supported:

- Key Value v1/v2

- AWS to generate temporary credentials

```yaml
environments:
  dev:
    variables:
      # Key Value v2
      - name: DATABASE_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: db_password

      # Key Value v1
      - name: SECRET_TOKEN
        value:
          hvault_kv1:
            path: crafteo/app/dev
            key: token
            mount: kv1

    # Hashivault module with AWS secret engine
    # Generate environment variables:
    # - AWS_ACCESS_KEY_ID
    # - AWS_SECRET_ACCESS_KEY
    # - AWS_SESSION_TOKEN
    hashivault:
      aws:
        name: dev_role
        role_arn: arn:aws:iam::111122223333:role/dev_role
        role_session_name: dev-session
        ttl: 2h
```

AWS

Multiple AWS services are supported:

- Secrets Manager

- STS Assume Role for temporary IAM Role credentials

- SSM Parameter Store

```yaml
environments:
  dev:
    variables:
      # SSM Parameter Store
      - name: SOME_PARAMETER_STORE_SECRET
        value:
          aws_ssm_parameter:
            name: secret-parameter

      # Secrets Manager
      - name: SOME_SECRET_MANAGER_PASSWORD
        value:
          aws_secret:
            id: secret-password

    # Generate temporary AWS credentials for IAM Role
    # Generate environment variables:
    # - AWS_ACCESS_KEY_ID
    # - AWS_SECRET_ACCESS_KEY
    # - AWS_SESSION_TOKEN
    aws:
      assume_role:
        role_arn: arn:aws:iam::12345678910:role/dev_deploy
```

See AWS doc

More examples

- Hashicorp Vault
  - Key Value v1/v2
  - AWS temporary credentials
- AWS
  - Secrets Manager
  - STS Assume Role for temporary IAM credentials
  - SSM Parameter Store
- Google Cloud
  - Secret Manager
- Azure
  - Key Vault
- BitWarden

Multi-environment context

.novops.yml can be configured with multiple environments:

```yaml
environments:
  dev:
    variables:
      - name: DATABASE_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: db_password

  prod:
    variables:
      - name: DATABASE_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/prod
            key: db_password
```

Novops will prompt for an environment by default:

```shell
novops load
# Select environment: dev, prod
```

You can also specify the environment on the command line:

```shell
novops load -e dev
```

Or specify a default environment in .novops.yml:

```yaml
config:
  default:
    environment: dev
```

Files

Novops can also write files such as SSH keys. Files are kept in a secured tmpfs directory, see Novops Security Model.

```yaml
environments:
  dev:
    files:
      # Each file entry generates a file AND an environment variable
      # pointing to generated file such as
      # ANSIBLE_PRIVATE_KEY=/run/user/1000/novops/.../file_ANSIBLE_PRIVATE_KEY
      - variable: ANSIBLE_PRIVATE_KEY
        content:
          hvault_kv2:
            path: crafteo/app/dev
            key: ssh_key
```

Plain strings

Variables and files can also be loaded from plain strings. This is useful for non-secret values, such as a database user alongside its password, or for generic configuration.

```yaml
environments:
  dev:
    variables:
      # Plain string will be loaded as DATABASE_USER="app-dev"
      - name: DATABASE_USER
        value: app-dev

      - name: DATABASE_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: db_password

    files:
      # File with plain string content
      - variable: APP_CONFIG
        content: |
          db_host: localhost
          db_port: 3306
```

Next steps

- See Novops configuration details and modules

- Novops Security Model

- Check out Advanced examples and Use Cases

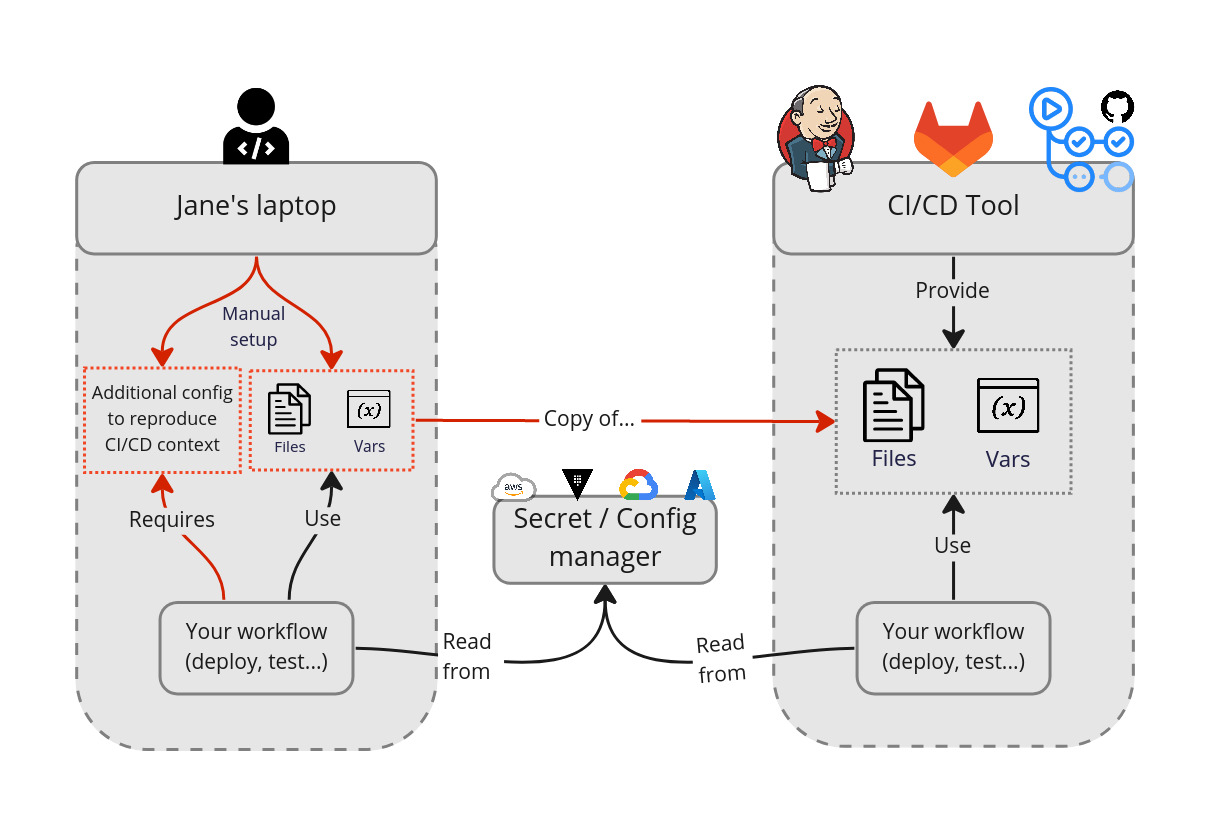

Why Novops?

Secrets are often mishandled in development environments, production procedure and CI pipelines:

- Kept locally under git-ignored directories and forgotten about

- Kept under unsafe directories (world or group readable)

- Accessible directly or indirectly by far too many people through your CI server config

They're also hard to manage, leaving developers with a poor experience when they need to configure their projects for multi-environment workloads:

- Hours are spent by your team hand-picking secrets from secret managers and switching between dev/prod and other environment configs

- You're not able to reproduce locally what happens on CI because of all the variables and secrets configured there, so you spend hours debugging CI problems

- Even with Docker, Nix, GitPod or other tools providing a reproducible environment, you still have significant drift because of environment variables and config files

- Your developers want to access Production and sensitive environments, but they're locked out for lack of a way to provide scoped, temporary and secure credentials

With and without Novops

Consider a typical Infra as Code project:

- Deployment tool such as Terraform, Ansible or Pulumi

- CI/CD with GitLab CI, GitHub Actions or Jenkins

- Multiple environments (dev, prod...)

Secrets are managed by either:

- A secret / config manager like Hashicorp Vault or AWS Secrets Manager

- Vendor-specific CI/CD secret storage provided as environment variables or files

- Secrets stored locally on developer machines, often synced manually from one of the above

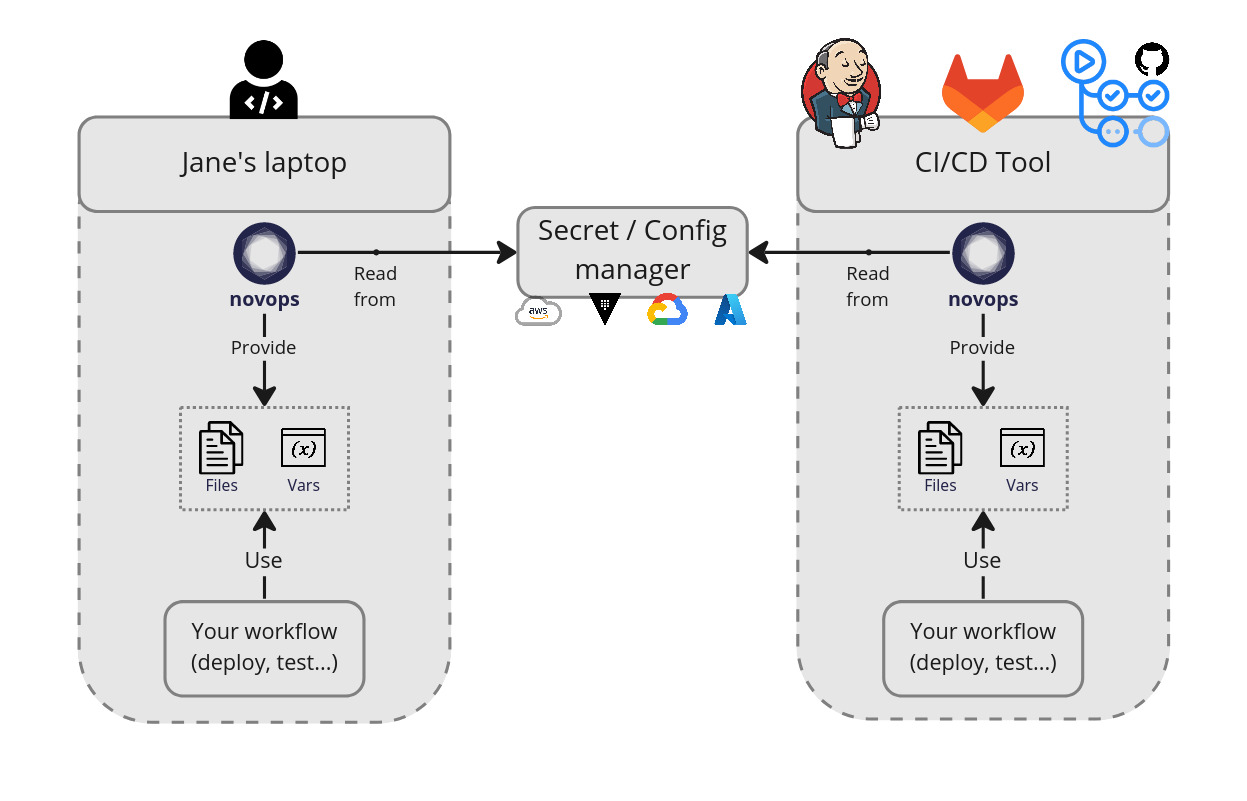

Novops allows your team to manage secrets & configs from a single Git-versioned file. Reproducing CI/CD context locally becomes easier and more secure:

- Files and environment variables are loaded from the same source of truth

- Secrets are stored securely and can be cleaned-up easily

- It's then easy to reproduce the same context locally and on CI/CD

Novops Security Model

- Overview

- Novops security added value

- Temporary secrets and secure directories

- Limitations

- External libraries and CVEs

Overview

Novops does its best to load secrets securely, but some points must be considered. In short:

- Novops ensures secrets can't be read by another user and won't be persisted by storing them directly in-memory or under secure temporary directories.

- Novops itself does not persist any secret.

- .novops.yml config file does not contain any secret and can safely be versioned with Git or another version control tool.

- Libraries used are carefully chosen and regularly updated.

Novops security added value

Secrets are often mishandled during local development and using CI: stored permanently under git-ignored directories, $HOME/... sub-folders, spread across CI servers config...

Such manual secret management is risky even when done with best practices in mind. Novops helps handle secrets more securely during local development and on CI, along with Secret Managers like Hashicorp Vault or Cloud secret managers.

Temporary secrets and secure directories

Novops generates secrets as environment variables and files to be used by sub-processes. Secret files are written to a tmpfs file system (in-memory file system) under a protected directory that only the user running Novops (or root) can access (XDG_RUNTIME_DIR by default, or a protected directory in /tmp).

In short:

- If XDG_RUNTIME_DIR exists, Novops will save files in this secure directory.
- Otherwise, files are saved under a user-specific /tmp directory.
- Alternatively, you can use novops load -w PATH to point to a custom secure directory, though you're responsible for ensuring the directory is secure (only your user can read/write it, and it should not be persisted).

Files potentially generated by Novops:

- novops load -s SYMLINK creates an exportable dotenv file in the protected directory
- The files module generates files in the protected directory by default
- Environment variables for processes are stored under /proc/${pid}/environ

This offers a better protection than keeping secrets directly on-disk or manually managing them.

With XDG_RUNTIME_DIR

If XDG_RUNTIME_DIR variable is set, secrets are stored as files under a subdirectory of XDG_RUNTIME_DIR. In short, this directory is:

- Owned and read/writable only by current user

- Bound to lifecycle of current user session (i.e. removed on logout or shutdown)

- Usually mounted as a tmpfs volume, but not necessarily (the spec does not require it)

This ensures loaded secrets are securely stored while being used and not persisted unnecessarily.

To read more about XDG Runtime Dir, see:

Without XDG_RUNTIME_DIR

If XDG_RUNTIME_DIR is not available, Novops will issue a warning and try to emulate an XDG-like behavior under a /tmp sub-folder. There's no guarantee it will fully implement the XDG spec, but the directory is created with the following properties:

- Owned and read/writable only by current user

- By using a /tmp sub-folder, we reasonably assume content won't persist across reboots and logouts

See prepare_working_directory() in src/lib.rs

This may be less secure. Novops will issue a warning in such situation, and you're advised to use a system with XDG_RUNTIME_DIR available.
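The fallback described above can be pictured with the following shell sketch. It only illustrates the idea and is not Novops' logic; see prepare_working_directory() in src/lib.rs for the real implementation. pick_workdir and the novops-<uid> directory name are hypothetical. The key point is that a predictable /tmp path must not be trusted just because it already exists, so the sketch checks ownership, symlinks and permissions after creating it.

```shell
# Hypothetical sketch: choose a private working directory for secret files.
# Not Novops' implementation; see prepare_working_directory() in src/lib.rs.
pick_workdir() {
  tmp_base=${1:-/tmp}

  # Prefer XDG_RUNTIME_DIR when it is set and exists.
  if [ -n "${XDG_RUNTIME_DIR:-}" ] && [ -d "$XDG_RUNTIME_DIR" ]; then
    printf '%s\n' "$XDG_RUNTIME_DIR/novops"
    return 0
  fi

  echo "warning: XDG_RUNTIME_DIR not available, using $tmp_base" >&2
  dir="$tmp_base/novops-$(id -u)"

  # -m 700 sets owner-only permissions regardless of umask.
  # Failure is tolerated because the directory may already exist.
  mkdir -m 700 "$dir" 2>/dev/null || :

  # Never trust a pre-existing path: it must be a real directory we own...
  if [ ! -d "$dir" ] || [ -L "$dir" ] || [ ! -O "$dir" ]; then
    echo "refusing insecure directory: $dir" >&2
    return 1
  fi
  # ...with no permissions at all for group or others.
  case "$(ls -ld "$dir")" in
    drwx------*) printf '%s\n' "$dir" ;;
    *) echo "refusing directory with loose permissions: $dir" >&2; return 1 ;;
  esac
}
```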

Limitations

Novops does its best to provide a more secure way of handling secrets, though it's not 100% bullet-proof:

- Using tmpfs should keep secrets in an in-memory file system, but secrets may be swapped to disk, which may present a security risk.
- Environment variables and files can be read by another process running as the same user running Novops.
- A root or equivalent user may be able to access secrets, even if they are in memory or in secure folders.

These are OS limitations that Novops alone can't solve. Even Hashicorp Vault, which can be seen as a very good security tool, has similar limitations.

How can I make my setup more secure?

- Disable swap. This will prevent secrets in the protected tmpfs folder from being swapped to disk.
- Disable core dumps. A root user may be able to force core dumps and retrieve secrets from memory.
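Core dumps can be disabled per shell session before running Novops, as sketched below. This only stops core files from being written for processes started from that shell. It does not prevent root from reading process memory by other means, for example by attaching a debugger. Swap is a system-wide setting that requires root, so the swap commands appear only as comments.

```shell
# Disable core dumps for this shell and every process started from it
# (for example `novops run -- ...`). Lowering this limit needs no privileges.
ulimit -c 0
echo "core file size limit: $(ulimit -c)"

# Swap is system-wide and needs root, so it is only shown here:
#   swapon --show      # list active swap devices (util-linux)
#   sudo swapoff -a    # disable active swap (re-enabled at boot if listed in /etc/fstab)
```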

Overall, Novops is just an added security layer in your security scheme and is limited by surrounding environment and underlying usage. You should always follow security best practices for secret management.

External libraries and CVEs

Novops uses open source libraries and updates them regularly to their latest version to get security patches and CVE fixes.

CLI reference

- Commands
  - novops load
  - novops run
  - novops completion
  - novops schema
- Built-in environment variables
- Variables loaded by default
- Examples

Commands

Novops commands:

- load - Load a Novops environment. Output resulting environment variables to stdout, or to a file if -s is used
- run - Run a command with loaded environment variables and files
- completion - Output completion code for various shells
- schema - Output Novops config JSON schema
- help - Show help and usage

All commands and flags are specified in main.rs

novops load

```shell
novops load [OPTIONS]
```

Load a Novops environment. Output resulting environment variables to stdout or to a file using -s/--symlink.

Intended usage is for redirection with source such as:

```shell
source <(novops load)
```

It's also possible to create a dotenv file in a secure directory and a symlink pointing to it with --symlink/-s:

```shell
novops load -s .envrc
source .envrc
```

Options:

- -c, --config <FILE> - Configuration to use. Default: .novops.yml
- -e, --env <ENVNAME> - Environment to load. Prompt if not specified.
- -s, --symlink <SYMLINK> - Create a symlink pointing to generated environment variable file. Implies -o 'workdir'
- -f, --format <FORMAT> - Format for environment variables (see below)
- -w, --working-dir <DIR> - Working directory under which files and secrets will be saved. Defaults to XDG_RUNTIME_DIR if available, or a secured temporary directory otherwise. See Security Model for details.
- --dry-run - Perform a dry-run: no external service will be called and dummy secrets are generated.

Supported environment variable formats with -f, --format <FORMAT>:

- dotenv-export: output variables with the export keyword, such as export FOO='bar'
- dotenv: output variables as-is, such as FOO='bar'
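The practical difference between the two formats is the export keyword. Sourcing a dotenv-export file exports each variable to child processes. Sourcing a plain dotenv file only sets shell variables, unless auto-export is enabled with set -a. The sketch below demonstrates this with hand-written files in both formats. The file contents and the child_sees_foo helper are illustrative and are not generated by Novops.

```shell
# Print FOO as seen by a child process after sourcing FILE in a subshell.
# Pass "auto" as second argument to enable auto-export (set -a) while sourcing.
child_sees_foo() {
  (
    unset FOO
    if [ "${2:-}" = auto ]; then set -a; fi
    . "$1"
    set +a
    sh -c 'printf "%s\n" "${FOO:-<unset>}"'
  )
}

demo_dir=$(mktemp -d)
printf '%s\n' "FOO='bar'"        > "$demo_dir/vars.dotenv"          # dotenv format
printf '%s\n' "export FOO='bar'" > "$demo_dir/vars.dotenv-export"   # dotenv-export format

child_sees_foo "$demo_dir/vars.dotenv-export"   # exported: the child sees bar
child_sees_foo "$demo_dir/vars.dotenv"          # shell variable only: prints <unset>
child_sees_foo "$demo_dir/vars.dotenv" auto     # set -a exports it: the child sees bar
rm -rf "$demo_dir"
```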

novops run

```shell
novops run [OPTIONS] -- <COMMAND>...
```

Run a command with loaded environment variables and files. Example:

```shell
novops run -- sh
novops run -- terraform apply
```

Always use -- before your command to avoid OPTIONS being mixed up with COMMAND. For example, novops run -e prod-rw sh -c "echo hello" would cause Novops to interpret -c as one of its OPTIONS rather than as part of COMMAND. Note that future versions of Novops may enforce -- usage, so commands like novops run echo foo may not be valid anymore.

Options:

- -c, --config <FILE> - Configuration to use. Default: .novops.yml
- -e, --env <ENVNAME> - Environment to load. Prompt if not specified.
- -w, --working-dir <DIR> - Working directory under which files and secrets will be saved. Defaults to XDG_RUNTIME_DIR if available, or a secured temporary directory otherwise. See Security Model for details.
- --dry-run - Perform a dry-run: no external service will be called and dummy secrets are generated. COMMAND will be called with dummy secrets.

novops completion

```shell
novops completion <SHELL>
```

Output completion code for various shells. Examples:

- bash: source <(novops completion bash)
- zsh: novops completion zsh > _novops && fpath+=($PWD) && compinit

Add output to your $HOME/.<shell>rc file.
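For bash, one way to make completion permanent is to add the source line shown above to ~/.bashrc. A small idempotent helper avoids duplicating that line when a setup script runs several times. add_line_once is a hypothetical name, not a Novops command.

```shell
# Hypothetical helper: append LINE to RC_FILE only if it is not already there,
# so re-running a setup script does not duplicate it.
add_line_once() {
  line=$1
  rc=$2
  touch "$rc"
  grep -qxF -- "$line" "$rc" || printf '%s\n' "$line" >> "$rc"
}

# Usage (bash):
# add_line_once 'source <(novops completion bash)' "$HOME/.bashrc"
```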

novops schema

```shell
novops schema
```

Output Novops config JSON schema.

Built-in environment variables

CLI flags can be specified via environment variables NOVOPS_*:

- NOVOPS_CONFIG - global flag -c, --config
- NOVOPS_ENVIRONMENT - global flag -e, --env
- NOVOPS_WORKDIR - global flag -w, --working-dir
- NOVOPS_DRY_RUN - global flag --dry-run
- NOVOPS_SKIP_WORKDIR_CHECK - global flag --skip-workdir-check
- NOVOPS_LOAD_SYMLINK - load subcommand flag -s, --symlink
- NOVOPS_LOAD_FORMAT - load subcommand flag -f, --format
- NOVOPS_LOAD_SKIP_TTY_CHECK - load subcommand flag --skip-tty-check

Variables loaded by default

Novops will load some variables by default when running novops [load|run]:

- NOVOPS_ENVIRONMENT - Name of the loaded environment

Examples

Override default config path

By default Novops uses .novops.yml to load secrets. Use novops load -c PATH to use another file:

```shell
novops load -c /path/to/novops/config.yml
```

Run a sub-process

Use novops run:

```shell
novops run -- sh
```

Use FLAGS -- COMMAND... to provide flags:

```shell
novops run -e dev -c /tmp/novops.yml -- terraform apply
```

Specify environment without prompt

Use novops load -e ENV to load an environment without prompting:

```shell
source <(novops load -e dev)
```

Use built-in environment variables

Sometimes you want to change behavior according to environment variables, such as when running Novops on CI.

Use built-in environment variables:

```shell
# Set environment variables
# Typically done via CI config or similar,
# here using export for example
export NOVOPS_ENVIRONMENT=dev
export NOVOPS_LOAD_SYMLINK=/tmp/.env

# Novops will load dev environment and create /tmp/.env symlink
novops load
```

Equivalent to:

```shell
novops load -e dev -s /tmp/.env
```

Check environment currently loaded by Novops

Novops exposes some variables by default (e.g. NOVOPS_ENVIRONMENT). You can use them to perform specific actions.

Simple example: show loaded environment

```shell
novops run -e dev -- sh -c 'echo "Current Novops environment: $NOVOPS_ENVIRONMENT"'
```

You can leverage NOVOPS_ENVIRONMENT to change behavior on certain environments, such as avoiding destructive actions in Prod:

```shell
#!/usr/bin/env bash
# destroy-all.sh
# Failsafe: if current environment is prod or contains 'prod', exit with error
if [[ $NOVOPS_ENVIRONMENT == *"prod"* ]]; then
  echo "You can't run this script in production or prod-like environments!"
  exit 1
fi

# ... some destructive actions
make destroy-all
```

NOVOPS_ENVIRONMENT is automatically loaded:

```shell
novops run -e prod -- ./destroy-all.sh # Won't work
novops run -e dev -- ./destroy-all.sh  # OK
```

You may instead add a custom MY_APP_ENVIRONMENT on each environment but it's less convenient.
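The failsafe above relies on bash's [[ ... ]] pattern matching. A portable POSIX sh variant can use case instead. As a design choice, this sketch also refuses to run when NOVOPS_ENVIRONMENT is empty, so running the script outside novops run fails closed. refuse_prod is a hypothetical function name.

```shell
# Portable (POSIX sh) failsafe: `case` pattern matching replaces [[ ... ]].
refuse_prod() {
  case "${NOVOPS_ENVIRONMENT:-}" in
    *prod*)
      echo "Refusing to run in '$NOVOPS_ENVIRONMENT' (prod or prod-like)" >&2
      return 1 ;;
    "")
      # Design choice: fail closed when no Novops environment is loaded.
      echo "NOVOPS_ENVIRONMENT is not set, run this through novops run" >&2
      return 1 ;;
  esac
  return 0
}

# In a script:
# refuse_prod || exit 1
# make destroy-all
```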

Writing .env to secure directory

Without Novops, you'd write a .env variable file directly to disk and source it, but writing secrets directly to disk may represent a risk.

novops load -s SYMLINK creates a symlink pointing to secret file stored securely in a tmpfs directory.

```shell
# Creates symlink .envrc -> /run/user/1000/novops/myapp/dev/vars
novops load -s .envrc

source .envrc # source it!

cat .envrc
# export HELLO_WORLD='Hello World!'
# export HELLO_FILE='/run/user/1000/novops/myapp/dev/file_...'
```
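The same pattern can be reproduced with plain shell tools to see why it helps. The file holding values sits in an owner-only temporary directory, and the project only contains a symlink to it. This sketch does not call Novops: mktemp -d stands in for Novops' secure working directory, and the variable value is a dummy.

```shell
# Illustration of the `novops load -s .envrc` pattern with plain shell tools.
# mktemp -d stands in for Novops' secure working directory (owner-only).
secure_dir=$(mktemp -d)
printf '%s\n' "export HELLO_WORLD='Hello World'" > "$secure_dir/vars"
chmod 600 "$secure_dir/vars"

# The project directory only gets a symlink, never the values themselves.
project=$(mktemp -d)
ln -s "$secure_dir/vars" "$project/.envrc"

. "$project/.envrc"          # sourcing follows the symlink
echo "HELLO_WORLD=$HELLO_WORLD"

# Once the secure directory is gone, .envrc is a dangling symlink
# and no secret value remains in the project directory.
rm -rf "$secure_dir"
if [ -L "$project/.envrc" ] && [ ! -e "$project/.envrc" ]; then
  echo ".envrc is now a dangling symlink"
fi
```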

Change working directory

Novops uses XDG_RUNTIME_DIR by default as its secure working directory for writing files. You can change the working directory with novops load -w. No check is performed on the target directory, so make sure not to expose secrets this way.

```shell
novops load -w "$HOME/another/secure/directory"
```
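Since Novops does not check a custom working directory, you may want to check it yourself first. The hypothetical is_private_dir helper below only verifies that the path is a real directory (not a symlink), owned by you, with drwx------ permissions. It cannot tell whether the directory is on tmpfs or will be persisted. That remains your responsibility.

```shell
# Hypothetical helper: succeed only if DIR is a real directory (not a symlink),
# owned by the current user, with no group/other permissions (drwx------).
is_private_dir() {
  dir=$1
  [ -d "$dir" ] && [ ! -L "$dir" ] && [ -O "$dir" ] || return 1
  case "$(ls -ld "$dir")" in
    drwx------*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage before pointing Novops at a custom directory:
# target="$HOME/another/secure/directory"
# is_private_dir "$target" && novops load -w "$target"
```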

Dry-run

Mostly used for testing, dry-run will only parse config and generate dummy secrets without reading or writing any actual secret value.

```shell
novops load --dry-run
```

Configuration and modules

Everything you can use within .novops.yml

- .novops.yml configuration schema
- Files and Variables
- Hashicorp Vault
  - Key Value v1/v2
  - AWS temporary credentials
- AWS
  - Secrets Manager
  - STS Assume Role for temporary IAM credentials
  - SSM Parameter Store
- Google Cloud
  - Secret Manager
- Azure
  - Key Vault
- SOPS (Secrets OPerationS)
- BitWarden

.novops.yml configuration schema

novops uses .novops.yml to load secrets. This doc details how this file can be used for various use cases. You can use another config with novops [load|run] -c PATH, though this doc will refer to .novops.yml for config file.

See full .novops.yml schema for all available configurations.

Configuration path precedence

Novops will load configuration in that order:

- -c or --config CLI flag, if provided
- .novops.yaml in current directory
- .novops.yml in current directory
- Fail, as no config can be found
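The lookup order above can be expressed as a short shell function. This sketch mirrors the documented precedence and is not Novops' code. find_novops_config is a hypothetical name, and the sketch does not check that a path given with -c exists.

```shell
# Hypothetical sketch of the documented config lookup order,
# evaluated in the current directory. Not Novops code.
find_novops_config() {
  flag_value=${1:-}                 # value of -c/--config, if any
  if [ -n "$flag_value" ]; then
    printf '%s\n' "$flag_value"
  elif [ -f .novops.yaml ]; then
    echo .novops.yaml
  elif [ -f .novops.yml ]; then
    echo .novops.yml
  else
    echo "no Novops config found" >&2
    return 1
  fi
}
```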

Configuration: Environments, Modules, Inputs and Outputs

.novops.yml defines:

- Environments for which secrets can be loaded
- Environments define Inputs (files, variables, aws...)
- Inputs are resolved into Environment Variables and Files (and other Outputs constructs internally with files and variables)
- Inputs can also use other Inputs, such as a Hashicorp Vault hvault_kv2 Input used by a variable Input to resolve a secret into an environment variable (see below for example)

Example: environments dev and prod with inputs files, variables and hvault_kv2.

```yaml
environments:
  # Environment name
  dev:
    # "variables" is a list of "variable" inputs for environment
    # Loading these inputs will result in environment variables outputs
    variables:
      # - name: environment variable name
      # - value: variable value, can be a plain string or another input
      - name: MY_APP_HOST
        value: "localhost:8080"

      # here variable value is another Input resolving to a string
      # novops will read the referenced value
      # in this case from Hashicorp Vault server
      - name: MY_APP_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: password

    # "files" is a list of "file" inputs
    files:
      # - content: input resolving to a string. Can be a plain string or another input resolving to a string
      # - variable: a variable name which will point to generated file
      # - dest: Optionally, the final destination where file will be generated. By default Novops creates a file in a secure directory.
      #
      # This file input will resolve to two Outputs:
      # - A variable MY_APP_CONFIG=/path/to/secure/location
      # - A file created in a secure location with content "bind_addr: localhost"
      #
      - variable: MY_APP_CONFIG
        content: |
          bind_addr: localhost

      # Like variables input, file Input content can use another Input
      # to load value from external source
      - variable: MY_APP_TOKEN
        content:
          hvault_kv2:
            path: crafteo/app/dev
            key: token
```

Root config keyword

Root config is used to specify global configurations for Novops and its modules:

```yaml
config:

  # novops default configs
  default:
    # name of environment loaded by default
    environment: dev

  # Hashivault config
  # See Hashivault module doc
  hashivault:
    # ...

  # AWS config
  # See AWS module doc
  aws:
    # ...

  # Other module configs may exist
  # See module docs or full Novops schema for details
  <someModule>:
    # ...
```

Config schema

This file is replaced by doc building process by an HTML and user-friendly version of docs/schema/config-schema.json.

If you see this outside of local development environment, it's a bug, please report it.

Files and Variables

files and variables are the primary way to configure Novops:

- Each element in variables will generate a single environment variable loaded from value
- Each element in files will generate a secured temporary file loaded from content

```yaml
environments:
  dev:
    # Variables to load
    # name and value are required keys
    # value can take a plain string or a module
    variables:
      # Plain string
      - name: APP_URL
        value: "http://127.0.0.1:8080"

      # Use Hashicorp Vault KV2 module to set variable value
      - name: APP_PASSWORD
        value:
          hvault_kv2:
            path: crafteo/app/dev
            key: password

      # Any input resolving to a string value can be used with variable
      # See below for available modules
      - name: APP_SECRET
        value:
          <module_name>:
            <some_config>: foo
            <another_config>: bar

    # List of files to load for dev
    # Each file must define either dest, variable or both
    files:
      # A symlink will be created at ./symlink-pointing-to-file, pointing to
      # a file in secure Novops working directory which will have content "foo"
      - symlink: ./symlink-pointing-to-file
        content: foo

      # File will be generated in a secure folder
      # APP_TOKEN variable will point to file
      # Such as APP_TOKEN=/run/user/1000/novops/.../file_VAR_NAME
      - variable: APP_TOKEN
        content:
          hvault_kv2:
            path: "myapp/dev/creds"
            key: "token"
```

File dest deprecation

dest is deprecated as it may result in file being generated in insecure directory and/or persisted on disk (as file is written directly at provided path, outside of secure Novops working directory). Use symlink instead.

```yaml
# [...]
files:
  # Prefer symlink
  - symlink: ./my-secret-token
    content:
      hvault_kv2:
        path: "myapp/dev/creds"
        key: "token"

  # DON'T DO THIS
  - dest: ./my-secret-token # not secure
    content:
      hvault_kv2:
        path: "myapp/dev/creds"
        key: "token"
```

Hashicorp Vault

Authentication & Configuration

The Vault authentication methods AppRole, Kubernetes, JWT are supported.

They are configured in the .novops.yml configuration file.

You can also generate the Vault token externally with the Vault CLI, as described in the Using Vault CLI section.

Example of JWT authentication:

```yaml
config:
  hashivault:
    address: http://localhost:8200
    auth:
      type: JWT
      role: novops-project
      mount_path: gitlab
```

The following configuration parameters are required when configuring Vault authentication:

| Parameter | Value | Description |
|---|---|---|
| type | AppRole, Kubernetes or JWT | Authentication type to use |
| role | | The Vault role to use on Vault login |

AppRole

```yaml
config:
  hashivault:
    address: http://localhost:8200
    auth:
      type: AppRole
      role: novops-project
      # The role id can also be provided by the environment variable VAULT_AUTH_ROLE_ID
      role_id: <uuid>
      # The secret id path to read the secret from.
      # The secret id can also be provided by the environment variable VAULT_AUTH_SECRET_ID
      secret_id_path: /path/to/secret
```

The environment variable VAULT_AUTH_SECRET_ID can also be used to provide the secret id.

The AppRole can be created without a secret bound to it; in this case, the secret id is not required.

JWT

```yaml
config:
  hashivault:
    address: http://localhost:8200
    auth:
      type: JWT
      role: novops-project
      mount_path: gitlab
      # The path to read the JWT token from.
      # The token can also be provided by the environment variable VAULT_AUTH_JWT_TOKEN
      token_path: /path/to/token
```

Kubernetes

```yaml
config:
  hashivault:
    address: http://localhost:8200
    auth:
      type: Kubernetes
      role: novops-project
      mount_path: kubernetes
```

Using Vault CLI

Authenticating with the vault CLI is enough. You can also use environment variables:

```shell
export VAULT_ADDR=https://vault.company.org
export VAULT_TOKEN="xxx"
```

Or specify the address or token path in .novops.yml via the root config element:

```yaml
config:
  hashivault:
    address: http://localhost:8200
    token_path: /path/to/token
```

Hashicorp Vault uses tokens for authenticated entities. You can use any authentication method (vault login, web UI/API...) to get a valid token.

Novops will load token in this order:

- VAULT_TOKEN environment variable
- token_path in .novops.yml
- Local file ~/.vault-token (generated by default with vault login)

Generally, VAULT_* environment variables available for vault CLI will also work with Novops.
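The documented token lookup order can be sketched as follows. resolve_vault_token is a hypothetical helper, not part of Novops or the vault CLI. Its optional argument stands for the token_path value from .novops.yml. This page does not say whether Novops falls back to ~/.vault-token when token_path is set but unreadable, so the sketch simply fails in that case.

```shell
# Hypothetical sketch of the documented Vault token lookup order.
# $1 stands for token_path from .novops.yml (optional).
resolve_vault_token() {
  token_path=${1:-}
  if [ -n "${VAULT_TOKEN:-}" ]; then
    # 1. VAULT_TOKEN environment variable
    printf '%s\n' "$VAULT_TOKEN"
  elif [ -n "$token_path" ]; then
    # 2. token_path from .novops.yml (fails if the file can't be read)
    cat "$token_path"
  elif [ -r "$HOME/.vault-token" ]; then
    # 3. ~/.vault-token, written by `vault login` by default
    cat "$HOME/.vault-token"
  else
    echo "no Vault token found" >&2
    return 1
  fi
}
```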

AWS Secret Engine

AWS Secret Engine generates temporary STS credentials. Maps directly to Generate Credentials API.

Outputs environment variables used by most AWS SDKs and tools:

- AWS_ACCESS_KEY_ID
- AWS_SECRET_ACCESS_KEY
- AWS_SESSION_TOKEN

```yaml
environments:
  dev:
    hashivault:
      aws:
        mount: aws
        name: dev_role
        role_arn: arn:aws:iam::111122223333:role/dev_role
        role_session_name: dev-session
        ttl: 2h
```

Namespaces

To use a Vault namespace, set the namespace config such as:

```yaml
config:
  hashivault:
    address: http://localhost:8200
    namespace: my-namespace/child-ns
```

Alternatively specify namespace directly in secret path as described in Vault doc.

Key Value v2

Hashicorp Vault Key Value Version 2 with variables and files:

```yaml
environments:
  dev:
    variables:
      - name: APP_PASSWORD
        value:
          hvault_kv2:
            mount: "secret"
            path: "myapp/dev/creds"
            key: "password"

    files:
      - name: SECRET_TOKEN
        dest: .token
        content:
          hvault_kv2:
            path: "myapp/dev/creds"
            key: "token"
```

Key Value v1

Hashicorp Vault Key Value Version 1 with variables and files:

environments:
  dev:
    variables:
      - name: APP_PASSWORD
        value:
          hvault_kv1:
            path: app/dev
            key: password
            mount: kv1 # Override secret engine mount ('secret' by default)
    files:
      - variable: APP_TOKEN
        content:
          hvault_kv1:
            path: app/dev
            key: token
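hvault_kv1 and hvault_kv2 are separate module keys because the two engine versions expose different HTTP API paths. KV v2 inserts data/ after the mount point and wraps the secret in an extra data object in its response. KV v1 does neither. The sketch below builds both read paths and applies the secret default mount mentioned above. kv_read_path is a hypothetical helper, not part of Novops.

```rust
/// Builds the Vault HTTP API path used to read a KV secret.
/// KV v1: /v1/{mount}/{path}
/// KV v2: /v1/{mount}/data/{path}
/// `mount` defaults to "secret" when not set.
fn kv_read_path(version: u8, mount: Option<&str>, path: &str) -> Result<String, String> {
    let mount = mount.unwrap_or("secret").trim_matches('/');
    let path = path.trim_start_matches('/');
    match version {
        1 => Ok(format!("/v1/{mount}/{path}")),
        2 => Ok(format!("/v1/{mount}/data/{path}")),
        other => Err(format!("unsupported KV version {other}")),
    }
}
```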

AWS

- Authentication & Configuration

- STS Assume Role

- Systems Manager (SSM) Parameter Store

- Secrets Manager

- S3 file

- Advanced examples

Authentication & Configuration

Authenticating with the aws CLI is enough: Novops will use locally available credentials. Specify your AWS credentials as usual (see AWS Programmatic access or Credentials quickstart).

Credentials are loaded in order of priority:

- Environment variables AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, etc.

- Config files .aws/config and .aws/credentials

- IAM Role attached to the ECS task or EC2 instance

You can also use the root config element to override certain settings (such as the AWS endpoint), for example:

config:
  # Example global AWS config
  # Every field is optional
  aws:
    # Use a custom endpoint
    endpoint: "http://localhost:4566/"

    # Set AWS region name
    region: eu-central-1

    # Set identity cache load timeout.
    #
    # By default identity load timeout is 5 seconds
    # but some custom config may require more than 5 seconds to load identity,
    # e.g. when prompting user for TOTP.
    #
    # See Advanced examples below for usage
    identity_cache:
      load_timeout: 120 # timeout in seconds

STS Assume Role

Generate temporary IAM Role credentials with STS AssumeRole:

Note that aws is a sub-key of the environment, not of files or variables, because it outputs multiple variables: AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY, AWS_SESSION_TOKEN and AWS_SESSION_EXPIRATION.

environments:
  dev:
    # Output variables to assume IAM Role:
    # AWS_ACCESS_KEY_ID
    # AWS_SECRET_ACCESS_KEY
    # AWS_SESSION_TOKEN
    # AWS_SESSION_EXPIRATION (not a built-in AWS variable: Unix timestamp in seconds specifying the token expiration date)
    aws:
      assume_role:
        role_arn: arn:aws:iam::12345678910:role/my_dev_role
        source_profile: novops
        # Optionally define credential duration in seconds. Defaults to 3600s (1h)
        # duration_seconds: 900
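AWS_SESSION_EXPIRATION is a plain Unix timestamp, so a script can check how much time the assumed-role credentials have left before it starts a long task. The sketch below assumes the variable holds an integer number of seconds, as described above. remaining_validity is a hypothetical name, and the current time is passed in to keep the function testable.

```rust
use std::time::{Duration, SystemTime, UNIX_EPOCH};

/// Time left before assumed-role credentials expire, given the value of
/// AWS_SESSION_EXPIRATION (Unix timestamp in seconds).
/// Returns None if the value is not an integer, Duration::ZERO if already expired.
fn remaining_validity(expiration: &str, now: SystemTime) -> Option<Duration> {
    let expires_at: u64 = expiration.trim().parse().ok()?;
    let now_secs = now.duration_since(UNIX_EPOCH).ok()?.as_secs();
    Some(Duration::from_secs(expires_at.saturating_sub(now_secs)))
}
```

In practice you would call it with the value of std::env::var("AWS_SESSION_EXPIRATION") and SystemTime::now().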

Systems Manager (SSM) Parameter Store

Retrieve key/values from AWS SSM Parameter Store as environment variables or files:

environments:
  dev:
    variables:
      - name: MY_SSM_PARAM_STORE_VAR
        value:
          aws_ssm_parameter:
            name: some-param
            # with_decryption: true/false
    files:
      - name: MY_SSM_PARAM_STORE_FILE
        content:
          aws_ssm_parameter:
            name: some-var-in-file

Secrets Manager

Retrieve secrets from AWS Secrets Manager as environment variables or files:

environments:
  dev:
    variables:
      - name: MY_SECRETSMANAGER_VAR
        value:
          aws_secret:
            id: my-string-secret
    files:
      - name: MY_SECRETSMANAGER_FILE
        content:
          aws_secret:
            id: my-binary-secret

S3 file

Load S3 objects as files or environment variables:

environments:
  dev:
    variables:
      - name: S3_OBJECT_AS_VAR
        value:
          aws_s3_object:
            bucket: some-bucket
            key: path/to/object
    files:
      - symlink: my-s3-object.json
        content:
          aws_s3_object:
            bucket: some-bucket
            key: path/to/object.json

You can also specify the region in which the bucket is located, if it differs from the configured region:

aws_s3_object:
  bucket: some-bucket
  key: path/to/object
  region: eu-central-1

Advanced examples

Using credential_process with TOTP or other user prompt

In some scenarios you might want to use credential_process in your config, for example with aws-vault, which may prompt for a TOTP or other user input.

For example, using ~/.aws/config such as:

[profile crafteo]
credential_process = aws-vault export --format=json crafteo
mfa_serial = arn:aws:iam::0123456789:mfa/my-mfa

The credential process prompts the user for a TOTP, but by default the AWS SDK times out after a few seconds, which is not enough time to enter it. Configure the identity cache load timeout to give the user enough time. In .novops.yml, set config such as:

config:
  aws:
    identity_cache:
      load_timeout: 120 # Give user 2 min to enter TOTP

Google Cloud

Authentication

Authenticating with the gcloud CLI is enough. Otherwise, provide credentials using Application Default Credentials:

- Set GOOGLE_APPLICATION_CREDENTIALS to a credential JSON file

- Set up credentials using the gcloud CLI

- Use an attached service account, relying on the VM metadata server to get credentials

Secret Manager

Retrieve secrets from GCloud Secret Manager as environment variables or files:

environments:
  dev:
    variables:
      - name: SECRETMANAGER_VAR_STRING
        value:
          gcloud_secret:
            name: projects/my-project/secrets/SomeSecret/versions/latest
            # validate_crc32c: true
    files:
      - name: SECRETMANAGER_VAR_FILE
        content:
          gcloud_secret:
            name: projects/my-project/secrets/SomeSecret/versions/latest

Microsoft Azure

Authentication

Logging in with the az CLI is enough. Novops uses azure_identity DefaultAzureCredential, so you can provide credentials through any source it supports.

Key Vault

Retrieve secrets from Key Vaults as files or variables:

environments:
  dev:
    variables:
      - name: AZ_KEYVAULT_SECRET_VAR
        value:
          azure_keyvault_secret:
            vault: my-vault
            name: some-secret
    files:
      - name: AZ_KEYVAULT_SECRET_FILE
        content:
          azure_keyvault_secret:
            vault: my-vault
            name: some-secret
            version: 1234118a41364a9e8a086e76c43629e4

SOPS (Secrets OPerationS)

Load SOPS encrypted values as files or environment variables.

The examples below use the following files:

# clear text for path/to/encrypted.yml
nested:
  data:
    nestedKey: nestedValue

# clear text for path/to/encrypted-dotenv.yml
APP_TOKEN: secret
APP_PASSWORD: xxx

Requirements

The sops CLI must be available locally, because Novops wraps calls to sops --decrypt under the hood.

All SOPS decryption methods are supported, exactly as with the CLI command sops --decrypt. See SOPS official doc for details.

Load a single value

Extract a single value as environment variable or file.

environments:
  dev:
    variables:
      # Load a single SOPS nested key as environment variable
      # Equivalent of `sops --decrypt --extract '["nested"]["data"]["nestedKey"]' path/to/encrypted.yml`
      - name: SOPS_VALUE
        value:
          sops:
            file: path/to/encrypted.yml
            extract: '["nested"]["data"]["nestedKey"]'

      # YOU PROBABLY DON'T WANT THAT
      # Without 'extract', the entire SOPS file content is set as environment variable
      # Instead, use the environment top-level key sops_dotenv (see below)
      # - name: SOPS_ENTIRE_FILE
      #   value:
      #     sops:
      #       file: path/to/encrypted.yml

    files:
      # Load SOPS decrypted content into secure temporary file
      # SOPS_DECRYPTED would point to decrypted file content such as SOPS_DECRYPTED=/run/...
      # Equivalent of `sops --decrypt path/to/encrypted.yml`
      - variable: SOPS_DECRYPTED
        content:
          sops:
            file: path/to/encrypted.yml
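The extract expression is a chain of bracketed keys, and each key descends one level into the decrypted document. The sketch below illustrates that addressing on a toy tree type. sops does the real parsing. This simplified version (hypothetical Node, parse_extract and extract) handles only double-quoted string keys, not array indices.

```rust
use std::collections::HashMap;

/// Toy stand-in for a decrypted YAML document.
#[derive(Debug, PartialEq)]
enum Node {
    Leaf(String),
    Map(HashMap<String, Node>),
}

/// Splits `["nested"]["data"]["nestedKey"]` into ["nested", "data", "nestedKey"].
/// Returns None on anything that is not a sequence of ["..."] segments.
fn parse_extract(expr: &str) -> Option<Vec<String>> {
    let mut keys = Vec::new();
    let mut rest = expr.trim();
    while !rest.is_empty() {
        let inner = rest.strip_prefix("[\"")?;
        let end = inner.find("\"]")?;
        keys.push(inner[..end].to_string());
        rest = &inner[end + 2..];
    }
    Some(keys)
}

/// Walks the tree one key at a time; fails if a key is missing or a leaf is hit early.
fn extract<'a>(root: &'a Node, expr: &str) -> Option<&'a Node> {
    let keys = parse_extract(expr)?;
    let mut node = root;
    for key in &keys {
        node = match node {
            Node::Map(children) => children.get(key)?,
            Node::Leaf(_) => return None,
        };
    }
    Some(node)
}
```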

Load entire file as dotenv

Load entire SOPS file(s) as dotenv environment variables:

environments:
  dev:
    # This is a direct sub-key of environment name
    # Not a sub-key of files or variables
    sops_dotenv:
      # Use plain file content as dotenv values
      - file: path/to/encrypted-dotenv.yml
      # Use a nested key as dotenv values
      - file: path/to/encrypted.yml
        extract: '["nested"]["data"]'

Note: SOPS cannot convert complex or nested values to dotenv (this is a SOPS limitation). Only dotenv-compatible files, or file parts selected with extract, can be used this way.
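In other words, every entry loaded through sops_dotenv must end up as a flat NAME=value pair. The sketch below illustrates that constraint. It assumes the decrypted content is available as plain KEY=value lines and does not handle quoting or escapes. parse_dotenv is a hypothetical helper. A nested YAML line such as nested: is rejected because it is not a NAME=value pair.

```rust
/// Parses simple dotenv text (`KEY=value` per line) into (name, value) pairs.
/// Blank lines and `#` comments are skipped; any other line without `=` is an error.
/// Quoting and escapes are NOT handled in this sketch.
fn parse_dotenv(text: &str) -> Result<Vec<(String, String)>, String> {
    let mut vars = Vec::new();
    for (i, line) in text.lines().enumerate() {
        let line = line.trim();
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // Split on the first '=' only, so values may themselves contain '='
        let (key, value) = line
            .split_once('=')
            .ok_or_else(|| format!("line {}: expected KEY=value, got {:?}", i + 1, line))?;
        if key.is_empty() {
            return Err(format!("line {}: empty variable name", i + 1));
        }
        vars.push((key.to_string(), value.to_string()));
    }
    Ok(vars)
}
```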

Pass additional flags to SOPS

By default, Novops loads SOPS secrets with the sops CLI, e.g. sops --decrypt [FILE]. You can pass additional flags with additional_flags.

Warning: this may break Novops loading if the sops output is not what Novops expects. Only use it if no module option already provides an equivalent feature. Feel free to open an issue or contribute to add a missing feature!

Example: enable SOPS verbose output

environments:
  dev:
    variables:
      - name: SOPS_VALUE_WITH_ADDITIONAL_FLAGS
        value:
          sops:
            file: path/to/encrypted.yml
            extract: '["nested"]["data"]["nestedKey"]'
            additional_flags: [ "--verbose" ]

Novops debug logging will show sops stderr (stdout is not shown to avoid leaking secrets):

RUST_LOG=novops=debug novops load

BitWarden

Authentication & Configuration

To use the BitWarden module:

- Ensure the BitWarden CLI bw is available in the same context novops runs in

- Set the environment variable BW_SESSION_TOKEN

environments:
  dev:
    files:
      - variable: PRIVATE_SSH_KEY
        content:
          bitwarden:
            # Name of the entry to load
            entry: Some SSH Key entry
            # Field to read from BitWarden objects. Maps directly to JSON field from 'bw get item' command
            # See below for details
            field: notes

Novops loads items as JSON using bw get item. Set field to the expected JSON field, separating sub-fields with a dot (.). Examples:

- Secure Note item: field: notes

- Login item: field: login.username, field: login.password, field: login.totp

- Identity item: field: identity.title, field: identity.firstName (or any other identity.xxx field)

- Card item: field: card.cardholderName, field: card.number, field: card.expMonth, field: card.expYear, field: card.code, field: card.brand

To see the full JSON output from BitWarden, use bw get or bw get template.
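A dotted field such as login.username addresses nested keys in the JSON returned by bw get item. One way to perform that lookup in Rust is to convert the dotted field into an RFC 6901 JSON Pointer, which serde_json's Value::pointer accepts. The helper below is a hypothetical sketch of that conversion, not Novops' actual implementation.

```rust
/// Converts a dotted field (e.g. "login.username") into an RFC 6901
/// JSON Pointer ("/login/username"). Per RFC 6901, inside each segment
/// `~` is escaped as `~0` first, then `/` as `~1`.
fn field_to_json_pointer(field: &str) -> String {
    field
        .split('.')
        .map(|segment| format!("/{}", segment.replace('~', "~0").replace('/', "~1")))
        .collect()
}
```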

Examples and Use Cases

CI/CD:

Infrastructure as Code:

Shell usage examples (sh, bash, zsh...)

Source into current shell

Source into your shell

# bash

source <(novops load)

# zsh / ksh

source =(novops load)

# dash

novops load -s .envrc

. ./.envrc

# fish

source (novops load | psub)

You can also create an alias such as:

alias nload="source <(novops load)"

Run sub-process

Run a sub-process or command loaded with environment variables:

# Run terraform apply

novops run -- terraform apply

# Run a sub-shell

novops run -- sh

This ensures secrets only exist in memory for as long as the command runs.
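Conceptually, running a sub-process this way adds the secrets to the child process environment only, never to the parent shell. The sketch below shows the idea with std::process::Command. run_with_env is a hypothetical helper, not Novops' implementation. It captures output with .output() so it can be tested. An interactive command would instead use .status(), which lets the child use the terminal.

```rust
use std::collections::HashMap;
use std::io;
use std::process::{Command, Output};

/// Runs `program` with `args`, adding `secrets` to the child's environment only.
/// The parent's environment is left untouched.
fn run_with_env(
    program: &str,
    args: &[&str],
    secrets: &HashMap<String, String>,
) -> io::Result<Output> {
    Command::new(program)
        .args(args)
        // Variables are set on the child process; the parent never exports them
        .envs(secrets)
        // Capture stdout/stderr (use .status() instead to inherit the terminal)
        .output()
}
```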

Create dotenv file in protected directory with symlink

Load secrets and create a .env -> /run/user/1000/novops/.../vars symlink pointing to a dotenv file you can source into your environment.

novops load -s .env

# .env is a symlink
# There's no risk of committing secrets to Git
# Source it!
source .env

Docker & Podman

Run containers

Load environment variables directly into containers:

docker run -it --env-file <(novops load -f dotenv -e dev) alpine sh

podman run -it --env-file <(novops load -f dotenv -e dev) alpine sh

novops load -f dotenv generates an env file output compatible with Docker and Podman.

Compose

Use Docker Compose, podman-compose or another tool compatible with the Compose Spec.

Generate a .env file:

novops load -s .env

And use it in your Compose file:

services:
  web:
    image: 'webapp:v1.5'
    env_file: .env

Build images

Include novops in your Dockerfile such as:

# Multi-stage build to copy novops binary from existing image

FROM crafteo/novops:0.7.0 AS novops

# Final image where novops is copied

FROM alpine AS app

COPY --from=novops /novops /usr/local/bin/novops

Nix

Setup a development shell with Nix Flakes.

Add Novops as input:

inputs = {

novops.url = "github:PierreBeucher/novops";

};

And then include Novops package wherever needed.

Example flake.nix:

{

inputs = {

novops.url = "github:PierreBeucher/novops";

};

outputs = { self, nixpkgs, novops }: {

devShells."x86_64-linux".default = nixpkgs.legacyPackages."x86_64-linux".mkShell {

packages = [

novops.packages."x86_64-linux".novops

];

shellHook = ''

# Run novops on shell startup

source <(novops load)

'';

};

};

}

A more complete Flake using flake-utils:

{

description = "Example Flake using Novops";

inputs = {

novops.url = "github:PierreBeucher/novops"; # Add novops input

flake-utils.url = "github:numtide/flake-utils";

};

outputs = { self, nixpkgs, novops, flake-utils }:

flake-utils.lib.eachDefaultSystem (system:

let

pkgs = nixpkgs.legacyPackages.${system};

novopsPackage = novops.packages.${system}.novops;

in {

devShells = {

default = pkgs.mkShell {

packages = [

novopsPackage # Include Novops package in your shell

];

shellHook = ''

# Run novops on shell startup

source <(novops load)

'';

};

};

}

);

}

Novops can be used on various CI/CD platforms through container images or shell.

GitLab CI

GitLab uses YAML to define jobs. You can either:

Use a Docker image packaging Novops

See Docker examples to build a container image packaging Novops, then use it in CI such as:

job-with-novops:
  image: your-image-with-novops
  stage: test
  script:
    # Load config
    # Specify environment to avoid input prompt
    - source <(novops load -e dev)
    # Environment is now loaded!
    # Run other commands...
    - terraform ...

Install novops on-the-fly

This method is not recommended. Prefer using an image packaging Novops to avoid unnecessary network load.

You can download the novops binary on the fly:

job-with-novops:
  image: hashicorp/terraform:light
  stage: test
  script:
    # Download novops
    - |-
      curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops-X64-Linux.zip" -o novops.zip
      unzip novops.zip
      mv novops /usr/local/bin/novops
    # Load config
    # Specify environment to avoid input prompt
    - source <(novops load -e dev)
    # Environment is now loaded!
    # Run other commands...
    - terraform ...

Alternatively, set a specific version:

job-with-novops:
  # ...
  variables:
    NOVOPS_VERSION: "0.6.0"
  script:
    # Download novops
    - |-
      curl -L "https://github.com/PierreBeucher/novops/releases/download/v${NOVOPS_VERSION}/novops-X64-Linux.zip" -o novops.zip
      unzip novops.zip
      mv novops /usr/local/bin/novops

Authenticating to external provider on CI

GitLab can authenticate with third-party services via OIDC tokens. You can use this to authenticate with Hashicorp Vault, AWS, or another provider before running Novops.

Alternatively, you can use CI environment variables to authenticate directly (see each module's Authentication docs for details).

More examples will be provided soon.

GitHub Action

Assuming your repository has a .novops.yml at its root, configure a job such as:

jobs:
  job_with_novops_load:
    name: run Novops on GitHub Action job
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3

      - name: setup Novops
        run: |
          curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops-X64-Linux.zip" -o novops.zip
          unzip novops.zip
          mv novops /usr/local/bin/novops

      - name: run Novops
        run: |
          novops load -s .envrc -e dev
          cat .envrc >> "$GITHUB_ENV"

      - name: a step with loaded novops environment
        run: env | grep MY_APP_HOST

Values loaded by Novops are appended to the $GITHUB_ENV file as documented in Setting environment variables. This allows Novops values to be passed to the job's subsequent steps.

Alternatively, set a specific version:

- name: setup Novops
  env:
    NOVOPS_VERSION: 0.6.0
  run: |
    curl -L "https://github.com/PierreBeucher/novops/releases/download/v${NOVOPS_VERSION}/novops-X64-Linux.zip" -o novops.zip
    unzip novops.zip
    sudo mv novops /usr/local/bin/novops

Note: the roadmap includes a GitHub Action to ease setup.

Jenkins

Use a Docker image packaging Novops

See Docker integration to build a Docker image packaging Novops, then use it in your Jenkinsfile such as:

agent {
    docker {
        image 'your-image-with-novops'
    }
}

stage('Novops') {
    steps {
        sh '''
            source <(novops load -e dev)
        '''
    }
}

Install novops on-the-fly

This method is not recommended. Prefer using an image packaging Novops to avoid unnecessary network load.

Set up a stage such as:

stage('Novops') {
    steps {
        sh '''
            curl -L "https://github.com/PierreBeucher/novops/releases/latest/download/novops-X64-Linux.zip" -o novops.zip
            unzip novops.zip
            sudo mv novops /usr/local/bin/novops
            source <(novops load -e dev)
        '''
    }
}

Alternatively, set a specific version:

environment {
    NOVOPS_VERSION = '0.6.0'
}

stage('Novops') {
    steps {
        sh '''
            curl -L "https://github.com/PierreBeucher/novops/releases/download/v${NOVOPS_VERSION}/novops-X64-Linux.zip" -o novops.zip
            unzip novops.zip
            mv novops /usr/local/bin/novops
            source <(novops load -e dev)
        '''
    }
}

Novops with Infrastructure as Code tools

Leverage built-in environment variables for various tools to help automate and pass secrets safely to processes.

Ansible

Use Ansible's built-in environment variables to set up your environments, e.g.:

- ANSIBLE_PRIVATE_KEY_FILE: SSH key used to connect to managed hosts

- ANSIBLE_VAULT_PASSWORD_FILE: path to the Ansible vault password

- ANSIBLE_INVENTORY: inventory to use

Your workflow will look like:

# Inventory, vault passphrase and SSH keys

# are set by environment variables

novops run -- ansible-playbook my-playbook.yml

Use a .novops.yml such as:

environments:
  dev:
    variables:
      # Comma separated list of Ansible inventory sources
      # Ansible will automatically use these inventories
      - name: ANSIBLE_INVENTORY
        value: inventories/dev
      # Add more as needed
      # - name: ANSIBLE_*
      #   value: ...
    files:
      # Ansible will use this key to connect via SSH on managed hosts
      - variable: ANSIBLE_PRIVATE_KEY_FILE
        content:
          hvault_kv2:
            path: myapp/dev
            key: ssh_key
      # Ansible uses this file to decrypt local Ansible vault
      - variable: ANSIBLE_VAULT_PASSWORD_FILE
        content:
          hvault_kv2:
            path: myapp/dev
            key: inventory_password

  # Another environment
  prod:
    variables:
      - name: ANSIBLE_INVENTORY
        value: inventories/prod
    files:
      - variable: ANSIBLE_PRIVATE_KEY_FILE
        content:
          hvault_kv2:
            path: myapp/prod
            key: ssh_key
      - variable: ANSIBLE_VAULT_PASSWORD_FILE
        content:
          hvault_kv2:
            path: myapp/prod
            key: inventory_password

Terraform

Use Terraform's built-in environment variables to set up your environments, e.g.:

- TF_WORKSPACE: set the workspace per environment

- TF_VAR_name: set Terraform variable name via environment variables

- TF_CLI_ARGS and TF_CLI_ARGS_name: specify additional CLI arguments, where name is the command the arguments apply to (e.g. TF_CLI_ARGS_plan)

Your workflow will then look like:

source <(novops load)

# No need to set workspace or custom variables

# They've all been loaded as environment variables and files

terraform plan

terraform apply

Use a .novops.yml such as:

environments:
  dev:
    variables:
      # Set workspace instead of running 'terraform workspace select [workspace]' manually
      - name: TF_WORKSPACE
        value: dev_workspace

      # Set environment config file and other environment-specific arguments using TF_CLI_ARGS_<command>
      # Terraform reads TF_CLI_ARGS_plan for 'terraform plan', TF_CLI_ARGS_apply for 'terraform apply', etc.
      - name: TF_CLI_ARGS_plan
        value: "-var-file=dev.tfvars -input=false"
      - name: TF_CLI_ARGS_apply
        value: "-var-file=dev.tfvars -input=false"
      # - name: TF_CLI_ARGS_xxx
      #   value: foo

      # Use TF_VAR_* to set declared variables
      # Such as loading a secret variable
      - name: TF_VAR_database_password
        value:
          hvault_kv2:
            path: myapp/dev
            key: db_password
      # - name: TF_VAR_[varname]
      #   value: ...

    files:
      # Terraform CLI configuration file for dev environment
      - variable: TF_CLI_CONFIG_FILE
        content: |
          ...

  # Production environment
  prod:
    variables:
      - name: TF_WORKSPACE
        value: prod_workspace
      - name: TF_CLI_ARGS_plan
        value: "-var-file=prod.tfvars"
      - name: TF_CLI_ARGS_apply
        value: "-var-file=prod.tfvars"
      - name: TF_VAR_database_password
        value:
          hvault_kv2:
            path: myapp/prod
            key: db_password
    files:
      - variable: TF_CLI_CONFIG_FILE
        content: |
          ...

Pulumi

Use Pulumi's built-in environment variables to set up your environments, e.g.:

- PULUMI_CONFIG_PASSPHRASE and PULUMI_CONFIG_PASSPHRASE_FILE: specify the passphrase used to decrypt secrets

- PULUMI_ACCESS_TOKEN: secret token used to authenticate with the Pulumi backend

- PULUMI_BACKEND_URL: specify the Pulumi backend URL, useful with self-managed backends that change between environments

Your workflow will look like:

# Access token, config passphrase and backend URL
# are set by environment variables
# Single quotes ensure $PULUMI_STACK is expanded inside the Novops-loaded environment,
# not by your current shell before Novops runs
novops run -- sh -c 'pulumi up -s "$PULUMI_STACK" -ryf'

- Stack passwords

- Stack name per environment

- Pulumi Cloud Backend authentication

- Custom Pulumi backend

Stack passwords

Pulumi protects stack secrets with a passphrase. Use the PULUMI_CONFIG_PASSPHRASE or PULUMI_CONFIG_PASSPHRASE_FILE variable to provide it.

environments:
  dev:
    # Use a variable
    variables:
      - name: PULUMI_CONFIG_PASSPHRASE
        value:
          hvault_kv2:
            path: myapp/dev
            key: pulumi_passphrase
    # Or a file
    files:
      - variable: PULUMI_CONFIG_PASSPHRASE_FILE
        content:
          hvault_kv2:
            path: myapp/dev
            key: pulumi_passphrase

Stack name per environment

Pulumi does not provide a built-in PULUMI_STACK variable, but you can still define one and use it with pulumi -s $PULUMI_STACK. See #13550

environments:
  dev:
    variables:
      - name: PULUMI_STACK
        value: dev
  prod:
    variables:
      - name: PULUMI_STACK
        value: prod

Pulumi Cloud Backend authentication

Pulumi's built-in PULUMI_ACCESS_TOKEN variable can be used to authenticate with the Pulumi Cloud Backend.

environments:
  dev:
    variables:
      - name: PULUMI_ACCESS_TOKEN
        value:
          hvault_kv2:
            path: myapp/dev
            key: pulumi_access_token
  prod:
    variables:
      - name: PULUMI_ACCESS_TOKEN
        value:
          hvault_kv2:
            path: myapp/prod
            key: pulumi_access_token

Custom Pulumi backend

Pulumi can be used with self-managed backends (AWS S3, Azure Blob Storage, Google Cloud storage, Local Filesystem).

Use PULUMI_BACKEND_URL to switch backends between environments and provide properly scoped authentication. Example for AWS S3 Backend:

environments:
  dev:
    variables:
      - name: PULUMI_BACKEND_URL
        value: "s3://dev-pulumi-backend"
    # Optionally, impersonate a dedicated IAM Role for your environment
    aws:
      assume_role:
        role_arn: arn:aws:iam::12345678910:role/app_dev_deployment
  prod:
    variables:
      - name: PULUMI_BACKEND_URL
        value: "s3://prod-pulumi-backend"
    aws:
      assume_role:
        role_arn: arn:aws:iam::12345678910:role/app_prod_deployment

Advanced

Debugging and log verbosity

novops is a compiled Rust binary. Use environment variables to set the logging level and enable backtraces:

# Set debug level for all Rust modules
export RUST_LOG=debug # or another level: info, warn, error

# Enable debug for novops only

export RUST_LOG=novops=debug

Show stack traces on error:

export RUST_BACKTRACE=1

# or

export RUST_BACKTRACE=full

See Rust Logging configuration and Rust Error Handling.

Internal architecture: Inputs, Outputs and resolving

Novops revolves around the following concepts:

Modules, Inputs, resolving & Outputs

Inputs are set in .novops.yml to describe how to load a value. They usually reference an external secret/config provider or a clear-text value.

Modules are responsible for generating Outputs from Inputs by resolving them. For example, the hvault_kv2 module loads secrets from the Hashicorp Vault KV2 engine:

# hvault_kv2 input: reference secrets to load
hvault_kv2:
  path: myapp/creds
  key: password

Outputs are the objects obtained from Inputs when they are resolved. Currently only 2 types of Output exist:

- Files

- Environment variables (as a sourceable file)

The hvault_kv2 example would output a String value such as:

myPassw0rd

Inputs can be combined with one another. For example, this .novops.yml config is a combination of Inputs:

environments:
  # Each environment is a complex Input
  # Providing both Files and Variable outputs
  dev:
    # variables is itself an Input containing a list of other Inputs
    # Each variable Input MUST resolve to a String
    variables:
      # variable Input and its value
      # It can be a plain string or any Input resolving to a string
      - name: APP_PASS
        value:
          hvault_kv2:
            path: myapp/creds
            key: password
      - name: APP_HOST
        value: localhost:8080

    # files is an Input containing a list of other Inputs
    # Each file Input within resolves to two outputs:
    # - A file output: content and path
    # - A variable output: path to generated file
    files:
      - name: APP_TOKEN
        # Content takes an Input which must resolve to a string or binary content
        content:
          hvault_kv2:
            path: myapp/creds
            key: api_token

When running, novops load will:

- Read config file and parse all Inputs

- Resolve all Inputs to their concrete values (i.e. generate Outputs from Inputs)

- Export all Outputs to system (i.e. write file and provide environment variable values)
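The export step can be sketched for a single file Input, which produces the two Outputs described in the config comments above: a file written to disk and a variable holding its path. This is a simplified illustration with hypothetical names (export_file, VariableOutput, to_exportable). It assumes the sourceable output uses export NAME='value' lines. It also writes the file with owner-only permissions on Unix, whereas Novops itself writes into a protected tmpfs directory.

```rust
use std::fs;
use std::io::{self, Write};
use std::path::Path;

/// A resolved variable Output: NAME=value.
#[derive(Debug, PartialEq)]
struct VariableOutput {
    name: String,
    value: String,
}

/// Writes a resolved file Output under `dir` and returns the variable Output
/// pointing at it: one file Input yields both a file and a variable.
fn export_file(dir: &Path, var_name: &str, content: &[u8]) -> io::Result<VariableOutput> {
    fs::create_dir_all(dir)?;
    let path = dir.join(var_name.to_lowercase());
    let mut options = fs::OpenOptions::new();
    options.write(true).create(true).truncate(true);
    #[cfg(unix)]
    {
        use std::os::unix::fs::OpenOptionsExt;
        // Owner read/write only (applies when the file is first created)
        options.mode(0o600);
    }
    options.open(&path)?.write_all(content)?;
    Ok(VariableOutput {
        name: var_name.to_string(),
        value: path.display().to_string(),
    })
}

/// Renders variable Outputs as sourceable shell lines, escaping single quotes.
fn to_exportable(vars: &[VariableOutput]) -> String {
    vars.iter()
        .map(|v| format!("export {}='{}'\n", v.name, v.value.replace('\'', r"'\''")))
        .collect()
}
```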

The resolving mechanism is based on the ResolveTo trait, implemented for each Input. A dummy implementation resolving HashiVaultKeyValueV2 into a String could look like:

// Example dummy implementation resolving HashiVaultKeyValueV2 as a String
#[async_trait]
impl ResolveTo<String> for HashiVaultKeyValueV2 {
    async fn resolve(&self, ctx: &NovopsContext) -> Result<String, anyhow::Error> {
        let vault_client = build_vault_client(ctx);
        let value = vault_client.kv2.read(&self.mount, &self.path, &self.key)?;
        Ok(value.to_string())
    }
}

See src/core.rs for details.
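To experiment with the resolving mechanism without Vault or an async runtime, here is a synchronous, std-only simplification. Everything in it is hypothetical. Context stands in for NovopsContext and uses an in-memory map as a fake secret store. Errors are plain Strings instead of anyhow::Error. StringInput mimics how an enum such as StringResolvableInput dispatches to each module's ResolveTo implementation.

```rust
use std::collections::HashMap;

/// Stand-in for NovopsContext: an in-memory "path/key" -> value store.
struct Context {
    fake_vault: HashMap<String, String>,
}

/// Synchronous simplification of core::ResolveTo<E>.
trait ResolveTo<T> {
    fn resolve(&self, ctx: &Context) -> Result<T, String>;
}

/// Module Input shaped like hvault_kv2's path/key fields.
struct KvInput {
    path: String,
    key: String,
}

impl ResolveTo<String> for KvInput {
    fn resolve(&self, ctx: &Context) -> Result<String, String> {
        let id = format!("{}/{}", self.path, self.key);
        ctx.fake_vault
            .get(&id)
            .cloned()
            .ok_or_else(|| format!("secret {id} not found"))
    }
}

/// Plain strings or module Inputs, dispatched like StringResolvableInput.
enum StringInput {
    Plain(String),
    Kv(KvInput),
}

impl ResolveTo<String> for StringInput {
    fn resolve(&self, ctx: &Context) -> Result<String, String> {
        match self {
            StringInput::Plain(value) => Ok(value.clone()),
            StringInput::Kv(input) => input.resolve(ctx),
        }
    }
}
```

Adding a module in this model means adding a variant and one match arm, which is the same shape as the StringResolvableInput integration described in the module guide.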

Novops config schema and internal structure

The Novops config schema is generated directly from the internal Rust structure of Inputs, deriving JsonSchema from the root struct core::NovopsConfigFile.

For instance:

#[derive(/* ... */ JsonSchema)]
pub struct NovopsConfigFile {
    pub environments: HashMap<String, NovopsEnvironmentInput>,
    pub config: Option<NovopsConfig>
}

This defines the top-level Novops config schema:

environments:
  dev: # ...
  prod: # ...
config: # ...

Top-level structure of the config (leaves are plain values):

graph LR;

name;

environments --> variables

variables --> varName("name")

variables --> varValue("value")

varValue --> anyStringInputVar("<i>any String-resolvable input</i>")

environments --> files

files --> fileName(name)

files --> fileVar(variable)

files --> fileContent(content)

environments --> aws

aws --> awsmoduleinput(<i>AWS module input...</i>)

environments --> otherModule

otherModule("<i>Other module name...</i>") --> otherModuleInput(<i>Other module input...</i>)

fileContent --> anyStringInputFile("<i>any String-resolvable input</i>")

config --> default

config --> hvaultconfig(hashivault)

config --> othermodconf2(Other modules config...)

Contributing

Thank you for your interest in contributing! To get started, you can check the Novops internal architecture and the guides below.

Development guide

Every command below must be run in the Nix Flake development shell:

nix develop

All commands are CI-agnostic: they work the same locally and on CI by leveraging Nix and cache-reuse. If it works locally, it will work on CI.

Build

For quick feedback, just run:

cargo build

cargo build -j 6

Novops is built for multiple platforms using cross:

task build-cross

For Darwin (macOS), you must build Darwin Cross image yourself (Apple does not allow distribution of macOS SDK required for cross-compilation, but you can download it yourself and package Cross image):

- Download Xcode (see also here)

- Follow osxcross instructions to package the macOS SDK

- At the time of writing, the latest Xcode version supported by osxcross was 14.1 (SDK 13.0)

- Use cross (https://github.com/cross-rs/cross) and cross-toolchains to build your image from the Darwin Dockerfile, for example:

# Clone repo and submodules
git clone https://github.com/cross-rs/cross
cd cross
git submodule update --init --remote

# Copy SDK to have it available in build context
cd docker
mkdir ./macos-sdk
cp path/to/sdk/MacOSX13.0.sdk.tar.xz ./macos-sdk/MacOSX13.0.sdk.tar.xz

cargo build-docker-image x86_64-apple-darwin-cross --tag local \
  --build-arg MACOS_SDK_DIR=./macos-sdk \
  --build-arg MACOS_SDK_FILE="MacOSX13.0.sdk.tar.xz"

cargo build-docker-image aarch64-apple-darwin-cross --tag local \
  --build-arg MACOS_SDK_DIR=./macos-sdk \
  --build-arg MACOS_SDK_FILE="MacOSX13.0.sdk.tar.xz"

# Or use older direct docker build commands:
# docker build -f ./cross-toolchains/docker/Dockerfile.x86_64-apple-darwin-cross \
#   --build-arg MACOS_SDK_DIR=./macos-sdk \
#   --build-arg MACOS_SDK_FILE="MacOSX13.0.sdk.tar.xz" \
#   -t x86_64-apple-darwin-cross:local .
# docker build -f ./cross-toolchains/docker/Dockerfile.aarch64-apple-darwin-cross \
#   --build-arg MACOS_SDK_DIR=./macos-sdk \
#   --build-arg MACOS_SDK_FILE="MacOSX13.0.sdk.tar.xz" \
#   -t aarch64-apple-darwin-cross:local \
#   .

Test

Tests are run on CI using the procedure described below. You can also run them locally in a nix develop shell.

Running non-integration tests

These tests do not require anything special and can be run as-is:

task test-doc

task test-clippy

task test-cli

task test-install

Running integration tests

Requirements:

- A running nix develop shell

- Azure account

- GCP account

Integration tests run against real services, preferably in containers or using a dedicated Cloud account:

- AWS: LocalStack container (AWS emulated in a container)

- Hashicorp Vault: Vault container

- Google Cloud: GCP account

- Azure: Azure account

Integration test setup is fully automated but may create real Cloud resources. Run:

task test-setup

See tests/setup/pulumi.

Remember to run task test-teardown after running integration tests. Cost should be negligible if you tear down the infrastructure right after running tests. It should remain negligible even if you forget, as only free or cheap resources are deployed, but it's better to tear down anyway.

# Setup containers and infrastructure and run all tests

# Only needed once to setup infra

# See Taskfile.yml for details and fine-grained tasks

task test-setup

# Run tests

task test-integ

# Cleanup resources to avoid unnecessary cost

task test-teardown

Maintenance and updates

- Update Cargo deps: cargo update

- Update the Nix flake to the latest version by running nix flake update

- Update the Containerfile version to match the Nix flake's rustc --version

- Update test images in tests/install/test-install.sh to match the latest version (use a fixed image version, not latest)

Doc

The doc is built with mdBook, with a JSON Schema generated by schemars.

The doc is published from the main branch by CI.

# Build doc
task doc

# Serve at localhost:3000
task doc-serve

Release

release-please should create/update Release PRs automatically on main changes. After merge, release tag and artifacts must be created locally:

Run cross Nix shell

nix develop .#cross

Create release

# GITHUB_TOKEN must be set with read/write permissions

# on Contents and Pull requests

export GITHUB_TOKEN=xxx

git checkout <release_commit_sha>

# git checkout main && git pull right after merge should be OK

hack/create-release.sh

Notes:

- Release may take some time, as it cross-builds all Novops binaries before running release-please

- The macOS build image must be available locally (see Build above)

Guide: implementing a module

Thanks for your interest in contributing! Before implementing a module, you may want to understand the Novops architecture.

- Overview

- 1. Input and Output

- 2. Implement loading logic with core::ResolveTo<E>

- 3. Integrate module to core

- 4. (Optional) Global configuration

- Testing

Overview

A few modules already exist from which you can take inspiration. This guide uses Hashicorp Vault Key Value v2 hvault_kv2 as an example.

You can follow this checklist (I follow and update this checklist myself when adding new modules):

- Define Input(s) and Output(s)

- Implement loading logic with core::ResolveTo<E>

- Integrate module to core

- Optionally, define global config for module

1. Input and Output

Create src/modules/hashivault/kv2.rs and add a module entry in src/modules/hashivault/mod.rs. Then define Input and Output structs for the module. Each struct needs a few derives as shown below.

A main struct must contain a single field matching the YAML key to be used as variable value or file content:

/// src/modules/hashivault/kv2.rs
#[derive(Debug, Deserialize, Clone, PartialEq, JsonSchema)]
pub struct HashiVaultKeyValueV2Input {
    hvault_kv2: HashiVaultKeyValueV2
}

The main struct references a more complex struct with our module's usage interface. Again, each field matches YAML keys provided to the end user:

/// src/modules/hashivault/kv2.rs
#[derive(Debug, Deserialize, Clone, PartialEq, JsonSchema)]
pub struct HashiVaultKeyValueV2 {
    /// KV v2 mount point
    ///
    /// default to "secret/"
    pub mount: Option<String>,

    /// Path to secret
    pub path: String,

    /// Secret key to retrieve
    pub key: String
}

2. Implement loading logic with core::ResolveTo<E>

ResolveTo<E> trait defines how our module is supposed to load secrets. In other words, how are Inputs supposed to be converted to Outputs. Most of the time, ResolveTo<String> is used as we want to use it as environment variables or files content.

#![allow(unused)] fn main() { /// src/modules/hashivault/kv2.rs #[async_trait] impl ResolveTo<String> for HashiVaultKeyValueV2Input { async fn resolve(&self, ctx: &NovopsContext) -> Result<String, anyhow::Error> { let client = get_client(ctx)?; let result = client.kv2_read( &self.hvault_kv2.mount, &self.hvault_kv2.path, &self.hvault_kv2.key ).await?; Ok(result) } } }

Note the `self` and `ctx` arguments:

- `self` is used to pass module arguments from the YAML config. For instance:

  ```yaml
  hvault_kv2:
    path: app/dev
    key: db_pass
  ```

  is used as:

  ```rust
  &self.hvault_kv2.path
  &self.hvault_kv2.key
  ```

- `ctx` is the global Novops context, including the current environment and the entire `.novops.yml` config file. We used it above to create a Hashicorp Vault client from the global `config` element (see below).
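To make the roles of `self` and `ctx` concrete, here is a deliberately simplified, synchronous, std-only sketch. It is not Novops' actual trait: the real `ResolveTo<E>` is async and `NovopsContext` holds the current environment and parsed config. Here a hypothetical `MockContext` carries an environment name and an in-memory map standing in for Vault, keyed by `(mount, path)`.

```rust
use std::collections::HashMap;

/// Simplified, synchronous stand-in for Novops' async `ResolveTo<E>` trait.
pub trait ResolveTo<E> {
    fn resolve(&self, ctx: &MockContext) -> Result<E, String>;
}

/// Hypothetical context: current environment name plus a fake KV store
/// mapping (mount, path) to the secret's key/value pairs.
pub struct MockContext {
    pub env_name: String,
    pub kv_store: HashMap<(String, String), HashMap<String, String>>,
}

pub struct HashiVaultKeyValueV2 {
    pub mount: Option<String>,
    pub path: String,
    pub key: String,
}

pub struct HashiVaultKeyValueV2Input {
    pub hvault_kv2: HashiVaultKeyValueV2,
}

impl ResolveTo<String> for HashiVaultKeyValueV2Input {
    fn resolve(&self, ctx: &MockContext) -> Result<String, String> {
        // `self` carries the module arguments parsed from the YAML config...
        let args = &self.hvault_kv2;
        let mount = args.mount.as_deref().unwrap_or("secret/").trim_end_matches('/').to_string();
        // ...while `ctx` provides shared state (here, the fake store and environment).
        let secret = ctx
            .kv_store
            .get(&(mount.clone(), args.path.clone()))
            .ok_or_else(|| format!("[{}] no secret at {}/{}", ctx.env_name, mount, args.path))?;
        secret
            .get(&args.key)
            .cloned()
            .ok_or_else(|| format!("[{}] key '{}' not found", ctx.env_name, args.key))
    }
}
```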

3. Integrate module into core

`src/core.rs` defines the main Novops structs and the config file hierarchy, e.g.:

- `NovopsConfigFile`: config file format with an `environments: NovopsEnvironments` field
- `NovopsEnvironments` and `NovopsEnvironmentInput` with a `variables: Vec<VariableInput>` field
- `VariableInput` with a `value: StringResolvableInput` field
- `StringResolvableInput`: an enum with all Inputs resolving to `String`

All of this allows for a YAML config such as:

```yaml
environments: # NovopsEnvironments
  dev: # NovopsEnvironmentInput
    variables: # Vec<VariableInput>

      # VariableInput
      - name: FOO
        value: bar # StringResolvableInput is an enum for which String and complex value can be used

      # VariableInput
      - name: HV
        value: # Let's add HashiVaultKeyValueV2Input!
          hvault_kv2:
            path: app/dev
            key: db_pass
```
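The hierarchy can be pictured as plain Rust types. The std-only sketch below approximates it and is not the actual definition in `src/core.rs`: serde derives are omitted, `NovopsEnvironments` is assumed to be a map from environment name to `NovopsEnvironmentInput`, and `StringResolvableInput` is reduced to two variants. It builds the YAML example above by hand and lists one environment's variables via a hypothetical `variable_names` helper.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq)]
pub struct HashiVaultKeyValueV2 {
    pub mount: Option<String>,
    pub path: String,
    pub key: String,
}

#[derive(Debug, Clone, PartialEq)]
pub struct HashiVaultKeyValueV2Input {
    pub hvault_kv2: HashiVaultKeyValueV2,
}

/// Either a plain string or a module input (two variants only, for illustration).
#[derive(Debug, Clone, PartialEq)]
pub enum StringResolvableInput {
    String(String),
    HashiVaultKeyValueV2Input(HashiVaultKeyValueV2Input),
}

#[derive(Debug, Clone, PartialEq)]
pub struct VariableInput {
    pub name: String,
    pub value: StringResolvableInput,
}

#[derive(Debug, Clone, PartialEq)]
pub struct NovopsEnvironmentInput {
    pub variables: Vec<VariableInput>,
}

/// Assumption: environments are keyed by name (e.g. "dev").
pub type NovopsEnvironments = HashMap<String, NovopsEnvironmentInput>;

pub struct NovopsConfigFile {
    pub environments: NovopsEnvironments,
}

/// Hypothetical helper: names of the variables declared for `env`, if it exists.
pub fn variable_names(config: &NovopsConfigFile, env: &str) -> Option<Vec<String>> {
    config
        .environments
        .get(env)
        .map(|e| e.variables.iter().map(|v| v.name.clone()).collect())
}

/// Hand-built equivalent of the YAML example.
pub fn example_config() -> NovopsConfigFile {
    let hv = HashiVaultKeyValueV2 { mount: None, path: "app/dev".into(), key: "db_pass".into() };
    let dev = NovopsEnvironmentInput {
        variables: vec![
            VariableInput { name: "FOO".into(), value: StringResolvableInput::String("bar".into()) },
            VariableInput {
                name: "HV".into(),
                value: StringResolvableInput::HashiVaultKeyValueV2Input(HashiVaultKeyValueV2Input { hvault_kv2: hv }),
            },
        ],
    };
    let mut environments = HashMap::new();
    environments.insert("dev".to_string(), dev);
    NovopsConfigFile { environments }
}
```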

Add `HashiVaultKeyValueV2Input` to `StringResolvableInput` and handle it in `impl ResolveTo<String> for StringResolvableInput`:

```rust
// src/core.rs
pub enum StringResolvableInput {
    // ...
    HashiVaultKeyValueV2Input(HashiVaultKeyValueV2Input),
}

// ...

// async_trait is required here too, as for the module's own impl
#[async_trait]
impl ResolveTo<String> for StringResolvableInput {
    async fn resolve(&self, ctx: &NovopsContext) -> Result<String, anyhow::Error> {
        match self {
            // ...
            StringResolvableInput::HashiVaultKeyValueV2Input(hv) => hv.resolve(ctx).await,
        }
    }
}
```

This makes the module usable as a `value` for variables and as `content` for files.
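The dispatch pattern can be exercised in isolation. In this synchronous, std-only sketch, a hypothetical `FakeVault` map (keyed `"path/key"`) replaces `NovopsContext` and the real Vault client, and the module input is flattened to `path` and `key` for brevity. `StringResolvableInput` either returns a plain string as-is or delegates to the module's own `resolve`, mirroring the `match` in `src/core.rs`.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the context: "path/key" -> secret value.
pub type FakeVault = HashMap<String, String>;

pub struct HashiVaultKeyValueV2Input {
    pub path: String,
    pub key: String,
}

impl HashiVaultKeyValueV2Input {
    fn resolve(&self, ctx: &FakeVault) -> Result<String, String> {
        let lookup = format!("{}/{}", self.path, self.key);
        ctx.get(&lookup).cloned().ok_or_else(|| format!("secret {} not found", lookup))
    }
}

pub enum StringResolvableInput {
    String(String),
    HashiVaultKeyValueV2Input(HashiVaultKeyValueV2Input),
}

impl StringResolvableInput {
    /// Every variant resolves to a String; module variants delegate to their own logic.
    pub fn resolve(&self, ctx: &FakeVault) -> Result<String, String> {
        match self {
            StringResolvableInput::String(s) => Ok(s.clone()),
            StringResolvableInput::HashiVaultKeyValueV2Input(hv) => hv.resolve(ctx),
        }
    }
}
```

Adding a new module then means adding one enum variant and one `match` arm; callers resolving variables or files never need to know which module produced the value.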

4. (Optional) Global configuration

The `.novops.yml` config also has a root `config` keyword for global configuration, whose structure is defined by `NovopsConfig` in `src/core.rs`.

To add a global configuration, create a `HashivaultConfig` struct:

```rust
// src/modules/hashivault/config.rs
use std::path::PathBuf;
use serde::Deserialize;
use schemars::JsonSchema;

#[derive(Debug, Deserialize, Clone, PartialEq, JsonSchema)]
pub struct HashivaultConfig {
    /// Address in form http(s)://HOST:PORT
    ///
    /// Example: https://vault.mycompany.org:8200
    pub address: Option<String>,

    /// Vault token as plain string
    ///
    /// Use for testing only. DO NOT COMMIT NOVOPS CONFIG WITH THIS SET.
    pub token: Option<String>,

    /// Vault token path.
    ///
    /// Example: /var/secrets/vault-token
    pub token_path: Option<PathBuf>,

    /// Whether to enable TLS verify (true by default)
    pub verify: Option<bool>
}
```
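The `token` / `token_path` pair implies some precedence rule. One possible rule is sketched below as an assumption, not necessarily what Novops' `get_client` does: use `token` if set, otherwise read the file at `token_path` and trim surrounding whitespace, otherwise return an error. The struct mirrors `HashivaultConfig` without serde derives; `resolve_token` and `tls_verify` are hypothetical helpers.

```rust
use std::fs;
use std::path::PathBuf;

/// Std-only mirror of `HashivaultConfig` (serde/schemars derives omitted).
#[derive(Debug, Clone, Default)]
pub struct HashivaultConfig {
    pub address: Option<String>,
    pub token: Option<String>,
    pub token_path: Option<PathBuf>,
    pub verify: Option<bool>,
}

/// Hypothetical helper: a plain `token` wins, then the file at `token_path`.
pub fn resolve_token(config: &HashivaultConfig) -> Result<String, String> {
    if let Some(token) = &config.token {
        return Ok(token.clone());
    }
    if let Some(path) = &config.token_path {
        let raw = fs::read_to_string(path)
            .map_err(|e| format!("cannot read token file {}: {}", path.display(), e))?;
        // Token files often end with a newline; strip surrounding whitespace.
        return Ok(raw.trim().to_string());
    }
    Err("no Vault token configured".to_string())
}

/// Hypothetical helper: TLS verification stays on unless explicitly disabled,
/// matching the "true by default" doc comment on `verify`.
pub fn tls_verify(config: &HashivaultConfig) -> bool {
    config.verify.unwrap_or(true)
}
```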

Then add it to the `NovopsConfig` struct:

```rust
// src/core.rs
#[derive(Debug, Deserialize, Clone, PartialEq, JsonSchema)]
pub struct NovopsConfig {
    // ...

    pub hashivault: Option<HashivaultConfig>
}
```

The struct's content is now passed to `ResolveTo<E>` via `ctx` and can be used to define module behaviour globally:

```rust
#[async_trait]
impl ResolveTo<String> for HashiVaultKeyValueV2Input {
    async fn resolve(&self, ctx: &NovopsContext) -> Result<String, anyhow::Error> {
        // create client for the address set in global config
        let client = get_client(ctx)?;

        // ...
    }
}
```
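A rough, std-only idea of what the client-creation step might check first: a hypothetical `MockContext` exposes an optional `HashivaultConfig` (standing in for the global `config` element inside `NovopsContext`), and `client_address` picks the configured `address`, falling back to a `VAULT_ADDR` value supplied by the caller. Both the fallback and the error message are assumptions for illustration; the actual `get_client` may behave differently.

```rust
/// Std-only mirror of the relevant part of `HashivaultConfig`.
#[derive(Debug, Clone, Default)]
pub struct HashivaultConfig {
    pub address: Option<String>,
}

/// Hypothetical stand-in for `NovopsContext`: just the optional global config.
pub struct MockContext {
    pub hashivault: Option<HashivaultConfig>,
}

/// Hypothetical helper: global config wins, then a VAULT_ADDR-style fallback.
pub fn client_address(ctx: &MockContext, vault_addr_env: Option<&str>) -> Result<String, String> {
    ctx.hashivault
        .as_ref()
        .and_then(|c| c.address.clone())
        .or_else(|| vault_addr_env.map(str::to_string))
        .ok_or_else(|| "no Vault address: set config.hashivault.address or VAULT_ADDR".to_string())
}
```

A caller could pass `std::env::var("VAULT_ADDR").ok().as_deref()` as the fallback; taking it as a parameter keeps the helper free of global state and easy to test.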

Testing

Tests are implemented under `tests/test_<module_name>.rs`.

Most tests are integration tests using Docker containers for external systems and a dedicated `.novops.<MODULE>.yml` file with the related config.

If your module depends on an external component (such as a Hashicorp Vault instance), use Docker to spin up a container and configure it accordingly. See `tests/docker-compose.yml`.